By Deep Climate

Today I continue my examination of the key analysis section of the Wegman report on the Mann et al “hockey stick” temperature reconstruction, which uncritically rehashed Steve McIntyre and Ross McKitrick’s purported demonstration of the extreme biasing effect of Mann et al’s “short-centered” principal component analysis.

First, I’ll fill in some much needed context as an antidote to McIntyre and McKitrick’s misleading focus on Mann et al’s use of principal components analysis (PCA) in data preprocessing of tree-ring proxy networks. Their problematic analysis was compounded by Wegman et al’s refusal to even consider all subsequent peer reviewed commentary – commentary that clearly demonstrated that correction of Mann et al’s “short-centered” PCA had minimal impact on the overall reconstruction.

Next, I’ll look at Wegman et al’s “reproduction” of McIntyre and McKitrick’s simulation of Mann et al’s PCA methodology, published in the pair’s 2005 Geophysical Research Letters article (Hockey sticks, principal components, and spurious significance). It turns out that the sample leading principal components (PC1s) shown in two key Wegman et al figures were in fact rendered directly from McIntyre and McKitrick’s original archive of simulated “hockey stick” PC1s. Even worse, though, is the astonishing fact that this special collection of “hockey sticks” is not even a random sample of the 10,000 pseudo-proxy PC1s originally produced in the GRL study. Rather it expressly contains the very top 100 – one percent – having the most pronounced upward blade. Thus, McIntyre and McKitrick’s original Fig 1-1, mechanically reproduced by Wegman et al, shows a carefully selected “sample” from the top 1% of simulated “hockey sticks”. And Wegman’s Fig 4-4, which falsely claimed to show “hockey sticks” mined from low-order, low-autocorrelation “red noise”, contains another 12 from that same 1%!

Finally, I’ll return to the central claim of Wegman et al – that McIntyre and McKitrick had shown that Michael Mann’s “short-centred” principal component analysis would mine “hockey sticks”, even from low-order, low-correlation “red noise” proxies. But both the source code and the hard-wired “hockey stick” figures clearly confirm what physicist David Ritson pointed out more than four years ago, namely that McIntyre and McKitrick’s “compelling” result was in fact based on a highly questionable procedure that generated null proxies with very high auto-correlation and persistence. All these facts are clear from even a cursory examination of McIntyre’s source code, demonstrating once and for all the incompetence and lack of due diligence exhibited by the Wegman report authors.

Before diving into the details of Wegman et al’s misbegotten analysis, it’s worth reminding readers of the context of principal component analysis (PCA) in the Mann et al methodology of multi-proxy temperature reconstruction.

First of all, it should be noted that PCA was used in data pre-processing to reduce large sub-networks of tree-ring proxies to a manageable number of representative principal components, which were then combined with other proxies in the final reconstruction. The PCs retained together reflect the climatological information in the proxy sub-network; the actual number required depends on the size of the sub-network (which itself changes depending on the time period or “step” under consideration). But it also depends on the details of the PCA procedure itself.

In their 2005 GRL article, McIntyre and McKitrick (hereafter M&M) purported to have discovered a major flaw in the Mann et al methodology. Instead of standardizing each proxy series on the mean of the whole series before transforming into a set of PCs, Mann et al standardized on the mean during the instrumental calibration period. McIntyre and McKitrick claimed that the “short-centred” method, when applied to so-called “persistent red noise”, nearly always produces a hockey stick shaped first principal component (PC1). Furthermore, M&M focused on the PC1 produced for the North American (NOAMER) tree ring proxy sub-network in the 1400-1450 “step” of the MBH reconstruction. According to M&M, this PC1 was “essential” to the overall reconstruction; thus its “correction” demonstrated that the original “hockeystick” reconstruction was “spurious”.
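To make the difference between the two centering conventions concrete, here is a minimal sketch in R. It is not the MBH code, nor McIntyre’s script: the null proxies are plain AR1(.2) noise, the calibration period is assumed to be the last 79 of 581 years (1902-1980 within 1400-1980), the scaling and restandardization steps are left out, and the helper name pc1 is my own.

```r
# Illustrative sketch only: simplified PCA on AR(1) null proxies.
# Not the MBH98 algorithm and not the M&M script.
set.seed(123)
n_years   <- 581       # assumed span 1400-1980
n_proxies <- 70        # size of the 1400 NOAMER sub-network
calib     <- 503:581   # assumption: last 79 years = calibration period

# One AR(1) series per column (lag-one coefficient 0.2)
proxies <- replicate(n_proxies,
                     as.numeric(arima.sim(model = list(ar = 0.2), n = n_years)))

# Hypothetical helper: subtract each column's mean over `rows`,
# then return the first left singular vector (the PC1 time series).
pc1 <- function(x, rows) {
  centred <- sweep(x, 2, colMeans(x[rows, , drop = FALSE]))
  svd(centred)$u[, 1]
}

pc1_full  <- pc1(proxies, seq_len(n_years))  # conventional: whole-series mean
pc1_short <- pc1(proxies, calib)             # "short-centered": calibration mean
```

Plotting pc1_full and pc1_short side by side (and repeating with other AR1 parameters) is one way to see how much of any “hockey stick” shape comes from the centering choice and how much from the noise model.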

While M&M clearly identified a mistake (or, at best, a poor methodological choice), critiques of M&M pointed out two major problems with the M&M analysis. First, it turned out that M&M had left out a crucial step in their emulation of the MBH PCA methodology, namely the restandardization of proxy series prior to transformation (an issue first raised in Peter Huybers’s published comment). Second, and even more importantly, M&M had neglected to assess the overall impact of any bias engendered by Mann et al’s PCA. In particular, they failed to take into account any criterion for the number of PCs to be retained in the dimensionality reduction step, as well as the impact of combining all of the retained PCs with the other proxies.

These issues (and more) were treated comprehensively by Wahl and Ammann’s Robustness of the Mann, Bradley, Hughes reconstruction of Northern Hemisphere surface temperatures (Climatic Change, 2007), a paper that was first in press and available online in early 2006. They found that variants of PCA applied to the NOAMER tree-ring network had minimal impact on the final reconstruction, as long as the common climatological information in the proxy set was retained. In effect, “short-centered” PCA may have promoted “hockey stick” patterns in the proxy data to higher PCs, but these patterns were still present if all the PCs necessary to account for sufficient explained variance were retained. This paper, along with several others, was cited by the National Research Council’s comprehensive report on paleoclimatology as demonstrating that the “MBH methodology does not appear to unduly influence reconstructions of hemispheric mean temperature” (Surface Temperature Reconstructions for the Last 2,000 Years, p. 113).

But, as we have seen before, Wegman et al deliberately excluded substantive consideration of the peer-reviewed literature in their analysis of M&M, even misrepresenting the work of Wahl and Ammann in a flimsy excuse to exclude it from substantive consideration.

MM05a was critiqued by Wahl and Ammann (2006) and the Wahl et al. (2006) based on the lack of statistical skill of their paleoclimate temperature reconstruction. Thus these critiques of the MM05a and MM05b work are not to the point.

Instead, Wegman et al appeared to take at face value all of M&M’s assertions, including the conflation of the NOAMER PC1 with the overall reconstruction, as is very clear from the reproduction of M&M Fig. 1 in Wegman et al 4.1.

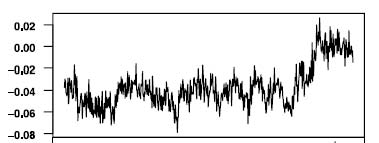

Figure 4.1: Reproduced version of Figure 1 in McIntyre and McKitrick (2005b). Top panel is PC1 simulated using MBH 98 methodology from stationary trendless red noise. Bottom panel is the MBH98 Northern Hemisphere temperature index reconstruction.


Discussion: The similarity in shapes is obvious. As mentioned earlier, red noise exhibits a correlation structure, which, although it is a stationary process, to will depart from the zero mean for minor sojourns. However, the top panel clearly exhibits the hockey stick behavior induced by the MBH98 methodology.

Now let’s dig into the implications of that figure and the subsequent “hockey stick” festival of Fig 4.4, in light of a review of McIntyre’s R script, archived as part of the available supplementary information of M&M 2005 at the AGU website. Recall that M&M had created 10,000 simulations of the NOAMER PC1 from null proxy sets created from “persistent red noise” (mistakenly assumed to be conventional red noise by Wegman et al, as seen above). This type of Monte Carlo test is often used to benchmark temperature reconstructions, although McIntyre’s method of creating “random” null proxies was unusual and highly questionable, as we shall see.

The first thing I noted in my original discussion was that, although the PC1 shown was supposedly a “sample”, it was identical in both the M&M and Wegman et al figures. How did that happen? A quick peek at the code gives the answer – this PC1 is read from an archived set of PC1s previously stored, and is #71 of the set. The relevant lines read:

hockeysticks<-read.table(file.path(url.source,"hockeysticks.txt"), skip=1)

...

plot(hockeysticks[,71],axes=FALSE,type="l",ylab="", font.lab=2)

Sure enough, the M&M ReadMe confirms this:

2004GL021750-hockeysticks.txt. Collation of 100 out of 10000 simulated PC1s, dimension 581 x 100

The eagle-eyed reader will note the code doesn’t have the prepended archive name, one error among many that Wegman et al would have had to correct with McIntyre’s assistance (the same mistakes that Peter Huybers had fixed, apparently on his own, some months before in his version of the script). Indeed, the script saves a set of 100 simulated PC1s every time, and so this archived set is a particular group that was saved at some point.

However, the more interesting question is this: Exactly how was this sample of 100 hockey stick PC1s selected from the 10,000? That too is answered in the script code.

As the simulation progresses, statistics are gathered on each and every simulated PC1. One of those statistics is McIntyre’s so-called “hockey stick index” or HSI, which in effect measures the length of the blade of each PC1 “hockeystick”. An HSI of 1 means that the mean of the calibration period portion of the series is 1 standard deviation above the mean of the whole series.
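For readers who want to try this themselves, the index as described above can be sketched in a few lines of R. This is my own paraphrase of the definition, not a copy of McIntyre’s function; the name hockey_stick_index and the assumption that the calibration period is the last 79 values of a 581-value series (1902-1980 within 1400-1980) are mine.

```r
# Sketch of the "hockey stick index" as described in the text:
# (calibration-period mean - whole-series mean) / whole-series sd.
# Assumption: the calibration period is the final `calib_len` values.
hockey_stick_index <- function(x, calib_len = 79) {
  n <- length(x)
  calib <- x[(n - calib_len + 1):n]
  (mean(calib) - mean(x)) / sd(x)
}

# Toy series: flat "shaft" of zeros with a step-up "blade" at the end
toy <- c(rep(0, 502), rep(1, 79))
hockey_stick_index(toy)    # positive: blade bends upward
hockey_stick_index(-toy)   # same magnitude, opposite sign: blade bends downward
```

The sign convention matters for what follows: a large positive value means an upward blade, a large negative value a downward one.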

The HSI for each PC1 (themselves numbered from 1 to 10,000) is stored in an array called stat2, as seen in this code snippet that renders the overall results.

#HOCKEY STICK FIGURES CITED IN PAPER

#MANNOMATIC

#simulations are not hard-wired and results will differ slightly in each run

#the values shown here are for a run of 10,000 and results for run of 100 need to be multiplied

temp<-(stat2>1)|(stat2< -1); sum(temp) #[1] 9934

temp<-(stat2>1.5)|(stat2< -1.5); sum(temp) #[1] 7351

temp<-(stat2>1.75)|(stat2< -1.75);sum(temp) #[1] 2053

temp<-(stat2>2)|(stat2< -2); sum(temp) #[1] 25

The comments show that more than 99% of the PC1s have a hockey stick index with an absolute value greater than 1, and a few (25 of the 10,000) even have an absolute value greater than 2.

Now, was a random sample of these PC1s saved? Or perhaps just the first 100 (which would also be reasonably random)? Not quite.

############################################

#SAVE A SELECTION OF HOCKEY STICK SERIES IN ASCII FORMAT

order.stat<-order(stat2,decreasing=TRUE)[1:100]

order.stat<-sort(order.stat)

hockeysticks<-NULL

for (nn in 1:NN) {

load(file.path(temp.directory,paste("arfima.sim",nn,"tab",sep=".")))

index<-order.stat[!is.na(match(order.stat,(1:1000)+(nn-1)*1000))]

index<-index-(nn-1)*1000

hockeysticks<-cbind(hockeysticks,Eigen0[[3]][,index])

} #nn-iteration

dimnames(hockeysticks)[[2]]<-paste("X",order.stat,sep="")

write.table(hockeysticks,file=file.path(url.source,

"hockeysticks.txt"),sep="\t",quote=FALSE,row.names=FALSE)

The first line sorts the set of PC1s by descending HSI and then copies the first 100 to another array. That array is then sorted by PC1 index so that each PC1 selected for the archive can be retrieved from the appropriate temporary file and saved in ASCII format.
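The difference between this selection and a genuinely random sample is easy to demonstrate. In the sketch below, the vector hsi is a made-up stand-in for stat2 (I am not reproducing the actual simulation output), and the names top100 and rand100 are mine; only the order()/sort() idiom follows the script.

```r
# Illustrative only: `hsi` holds synthetic values standing in for stat2.
set.seed(42)
hsi <- rnorm(10000, mean = 1.6, sd = 0.2)   # assumed, not real simulation output

# Selection as in the script: indices of the 100 largest values,
# then re-sorted by series number for retrieval
top100 <- sort(order(hsi, decreasing = TRUE)[1:100])

# What a random sample of 100 would look like instead
rand100 <- sort(sample(length(hsi), 100))

range(hsi[top100])    # confined to the extreme upper tail
range(hsi[rand100])   # spread across the whole distribution
```

Note also that sorting on the signed value, rather than the absolute value, guarantees that every archived series bends upward.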

Recall M&M’s description of the “sample” PC1 in figure 1 (Wegman et al 4.1):

The simulations nearly always yielded PC1s with a hockey stick shape, some of which bore a quite remarkable similarity to the actual MBH98 temperature reconstruction – as shown by the example in Figure 1.

That’s “some” PC1, all right. It was carefully selected from the top 100 upward bending PC1s, a mere 1% of all the PC1s.

To confirm these findings, I downloaded the archived “sample” set of PC1s. First I plotted good old #71, which, as can be readily seen, is identical to the “sample” PC1 in McIntyre’s original Fig 1 (and Wegman et al 4.1).

And here are the corresponding identical panels from M&M Fig 1 and Wegman et al Fig 4.1:

I then calculated the “Hockey stick index” for each of the 100 and confirmed that they all had HSI greater than 1.9. Also, 12 of the 100 had an HSI above 2, which jibes with the totals given by McIntyre in the comments and the main article (presumably there were another 13 severely downward PC1s with HSI less than -2).

It turns out that #71 is not too shabby. Its HSI is 1.97, which is #23 on the HSI hit parade, out of 10,000.

I then turned to Fig 4.4, which presented 12 more simulation PC1 hockey sticks. Although this figure was not part of the original M&M article, there is a fourth figure generated in the script, featuring a 2×6 display, just like the Wegman figure. A quick perusal of the code shows that these too were read from McIntyre’s special 1% collection, although a different selection of 12 PC1s would be output each time.

hockeysticks<-read.table(file.path(url.source,

"2004GL021750-hockeysticks.txt"),sep="\t",skip=1)

postscript(file.path(url.source,"hockeysticks.eps"),

width = 8, height = 11,

horizontal = FALSE, onefile = FALSE, paper = "special",

family = "Helvetica",bg="white",pointsize=8)

nf <- layout(array(1:12,dim=c(6,2)),heights=c(1.1,rep(1,4),1.1))

...

index<-sample(100,12)

plot(hockeysticks[,index[1]],axes=FALSE,type="l",ylab="",

font.lab=2,ylim=c(-.1,.03))

To confirm this, I set up a dynamic chart in Excel, and scrolled through to find the first PC1 displayed (in the upper left hand corner). Here is the dynamic chart in action, with #35 selected, followed by a close up of the matching top left PC1 in Wegman et al Fig 4.4.

Two more scrolls through and I had identified all 12 (independently corroborated by another correspondent). So here is Fig 4-4, with each “hockey stick” identified by its position within McIntyre’s top 1%, as well as its original identifier (an index between 1 and 10,000).

And as verification, I reran the final part of the M&M script, but with a small change to coerce display of the same 12 PC1 “hockey sticks” as Wegman et al had shown.

### index<-sample(100,12)

index = c(35,14,46,100,91,81,49,4,72,54,33)

Here is Wegman et al Fig 4-4 side by side with the resulting reproduction of the 12 hockey stick figure from the M&M script. They are clearly identical (although the Wegman et al version is not as dark for some reason).

Wegman et al 4.4 (L) and 12 identified PC1s from the top 1% archive (R).

Naturally, the true provenance of Fig 4-4 sheds a harsh light on Wegman et al’s wildly inaccurate caption:

Figure 4.4: One of the most compelling illustrations that McIntyre and McKitrick have produced is created by feeding red noise [AR(1) with parameter = 0.2] into the MBH algorithm. The AR(1) process is a stationary process meaning that it should not exhibit any long-term trend. The MBH98 algorithm found ‘hockey stick’ trend in each of the independent replications.

As was pointed out long ago by David Ritson (and discussed here recently), this greatly overstates what McIntyre and McKitrick actually demonstrated. To fully understand this point, it is necessary to quickly review the statistical models underpinning paleoclimatological reconstructions. Typically, proxies are considered to contain a climate signal of interest (in this case temperature), combined with noise representing the effects of non-climatic influences. This non-climatic noise is usually modeled with a low-order autoregressive (AR) model, meaning that the noise in a given year is correlated to that in immediately preceding years. Specifically, an AR model of order 1, commonly called “red noise”, specifies that values at time t in the time series be correlated with the immediately preceding values at time t-1. The amount of this auto-correlation is given by the lag-one coefficient parameter.

One of the common uses of AR noise models is to benchmark paleoclimatological reconstructions. In this procedure, random “null” proxy sets are generated and their performance in “reconstructing” temperature for part of the instrumental period is used to establish a threshold for the statistical skill of reconstruction from the real proxies. As estimated through various methods, the lag-one correlation coefficient used to generate the random null proxy sets is usually set between 0.2 and 0.4. (Mann et al 2008 used 0.24 and 0.4).
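As a rough illustration of what such AR1 noise looks like in practice, the following R sketch generates two series with base R’s arima.sim() and checks their lag-one autocorrelation with acf(). The helper names, the seed and the series length of 5000 are arbitrary choices of mine, made only so the estimates are stable.

```r
# Sketch: AR(1) "red noise" at two lag-one coefficients.
# Helper names and the series length are illustrative assumptions.
set.seed(2010)

simulate_ar1 <- function(phi, n) {
  as.numeric(arima.sim(model = list(ar = phi), n = n))
}

# Sample autocorrelation at lag 1 (element 1 of $acf is lag 0)
lag_one <- function(x) acf(x, lag.max = 1, plot = FALSE)$acf[2]

low  <- simulate_ar1(0.2, 5000)   # typical of benchmarking practice
high <- simulate_ar1(0.9, 5000)   # highly persistent

lag_one(low)    # should come out close to 0.2
lag_one(high)   # should come out close to 0.9
```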

As we have seen above, M&M employed a similar concept to generate simulated PC1s from random noise proxies. (Confusingly, M&M called their null proxies “pseudo-proxies”, a term previously employed for test proxies generated by adding noise to simulated past temperatures). However, M&M’s null proxies were not generated as AR1 noise as claimed by Wegman et al, but rather by using the full autocorrelation structure of the real proxies (in this case, the 70 series of the 1400 NOAMER tree-ring proxy sub-network).

The description of the method was somewhat obscure (and completely misunderstood by Wegman et al). But we can piece it together by following the code.

if (method2=="arfima")

{Data<-array(rep(NA,N*ncol(tree)), dim=c(N,ncol(tree)));

for (k in 1:ncol(tree)){

Data[,k]<-acf(tree[,k][!is.na(tree[,k])],N)[[1]][1:N]

}#k

} #arfima

...

if (method2=="arfima")

{N<-nrow(tree);

b<-array (rep(NA,N*n), dim=c(N,n) )

for (k in 1:n) {

b[,k]<-hosking.sim(N,Data[,k])

}#k

}#arfima

The ARFIMA notation refers to a more complicated three-part statistical model (the three parts being AutoRegressive, Fractional Integrative and Moving Average). It is a generalization of the ARIMA (autoregressive integrative moving average) model, itself an extension of the familiar ARMA (autoregressive moving average) model. ARFIMA permits the modeling, with just a few parameters, of “long memory” time series exhibiting high “persistence”. The generalized ARFIMA model was presented by J. R. M. Hosking in his 1981 paper, Fractional Differencing (Biometrika, 1981). A subsequent paper, Modeling persistence in hydrological time series using fractional differencing (Water Resources, 1984), outlined a method to derive a particular ARFIMA model from the full autocorrelation function of a time series, and generate a corresponding random synthetic series based on the ARFIMA parameters derived from that autocorrelation structure.

So first, each of the 70 tree-ring proxies used by Mann et al was analyzed for its complete individual auto-correlation structure, using the acf() function. Then within each of the 10,000 simulation runs, the Hosking algorithm (as implemented in the hosking.sim R function) was used to generate a set of 70 random “null” proxies, each one having the same auto-correlation structure, represented by its particular ARFIMA parameters, as its corresponding real proxy.

Naturally, this raises a number of issues that were not addressed by Wegman et al, since the authors completely failed to understand the actual methodology used in the first place. (This abject failure can be explained in part, if not excused, by M&M’s misleading description of their random proxies as consisting of “trendless” or “persistent” red noise, a nomenclature found nowhere else). Foremost among these is McIntyre and McKitrick’s implicit assumption that the “noise” component of the proxies, absent any climatic signal, can be assumed to have the same auto-correlation characteristics as the proxy series themselves. But as von Storch et al (2009) observed:

Note that the fact that such [long-term persistence] processes may have been identified in climatic records does not imply that they may also be able to represent non-climatic noise in proxies.

Even if Wegman et al completely missed the real issues, other critics did not fail to point out problems with the M&M null proxies, as I have discussed before. Ammann and Wahl (Climatic Change, 2007), observed that by using the “full autoregressive structure” of the real proxies, M&M “train their stochastic engine with significant (if not dominant) low frequency climate signal”. And, as we have already seen, David Ritson was aware of the true M&M methodology back in November 2005, and pointed out M&M’s “improper” methodology to Wegman et al within weeks of the Wegman report.

Indeed, the real reasons Wegman et al never released “their” code nor associated information are now perfectly clear. Doing so would have amounted to an admission that the supposed “reproduction” of the M&M results was nothing more than a mechanical rerun of the original script, accompanied by a colossally mistaken interpretation of M&M’s methodology and findings.

In any event, under attack by both Ritson and Ammann and Wahl, Steve McIntyre claimed several times that an extreme biasing effect had been demonstrated by using AR1 noise instead of the dubious ARFIMA null proxies, by both the NRC report and Wegman et al. As late as September 2008, McIntyre proclaimed:

The hosking.sim algorithm uses the entire ACF and people have worried that this method may have incorporated a “signal” into the simulations. It’s not something that bothered Wegman or NAS, since the effect also holds for AR1, but Wahl and Ammann throw it up as a spitball.

However, this claim is also highly misleading. First, as is now clear, McIntyre’s reliance on Wegman et al is speciously circular; Wegman et al mistakenly claimed that it was McIntyre and McKitrick who had demonstrated the “hockey stick” effect with AR1 noise, and certainly provided no evidence of their own.

So that leaves the NRC. It’s true that NRC did provide a demonstration of the bias effect using AR1 noise instead of ARFIMA. But it was necessary to choose a very high lag-one coefficient parameter (0.9) to show the extreme theoretical bias of “short-centered” PCA. Indeed, that high parameter was chosen by the NRC expressly because it represented noise “similar” to McIntyre’s more complex methodology.

To understand what a huge difference the choice of AR1 parameter can make, recall David Ritson’s formula for estimation of persistence (or “decorrelation time”) in AR1 noise, which in turn is based on the exponential decay in correlation of successive terms in the time series. The “decorrelation time” is given by (1 + phi)/(1 – phi).

Using this formula, one can calculate a persistence of 19 years within AR1(.9) noise, as opposed to a mere 1.5 years with the AR1(.2) noise claimed by Wegman et al to produce extreme “hockey stick” PC1s. And, as one might expect, rerunning the NRC code with the lower AR1 0.2 parameter yields dramatically different results.
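The arithmetic is simple enough to check directly. The function name below is mine; the formula is the one just quoted.

```r
# Decorrelation time for AR(1) noise, (1 + phi) / (1 - phi)
decorrelation_time <- function(phi) (1 + phi) / (1 - phi)

# Lag-one coefficients mentioned in this post
phi <- c(0.2, 0.4, 0.9)
data.frame(phi = phi, years = decorrelation_time(phi))
# roughly 1.5, 2.3 and 19 years respectively
```

Because the denominator shrinks toward zero, the persistence grows very quickly as the coefficient approaches 1, which is why 0.9 and 0.2 behave so differently.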

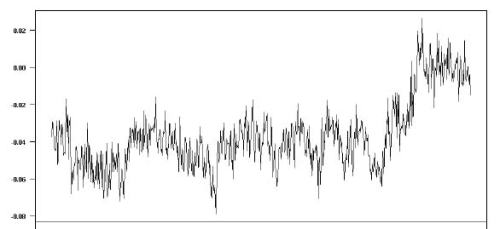

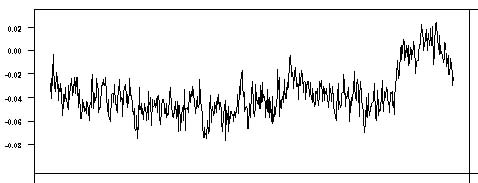

The first chart (at left) is a rerun of the NRC code (available here); the second chart (at right) is the same code but with the AR1 parameter set to 0.2, instead of 0.9, by simply adjusting one line of code:

phi <- 0.2;

5 PC1s generated from AR1(.9) (left) and AR1(.2) (right) red noise null proxies.

So Wegman et al’s “compelling” demonstration is shown to be completely false; the biasing effect of “short-centered” PCA is much less evident when applied to AR1(.2), even when viewing the simulated PC1s in isolation. To show the extreme effect claimed by McIntyre, one must use an unrealistically high AR1 parameter. This is yet one more reason that the NRC’s ultimate finding on the matter, namely that “short-centered” PCA did not “unduly influence” the resulting Mann et al reconstruction, is entirely unsurprising.

In summary, then, I have shown in Wegman et al a misleading focus on one particular step of the Mann et al algorithm, accompanied by a refusal to substantively consider the peer-reviewed scientific critiques of M&M.

And to top it all, Wegman et al flatly stated that the biasing Mann et al algorithm would produce “hockey stick” reconstructions from low-order, low-autocorrelation red noise, while displaying a set of curves from the top 1% of “hockey sticks” produced from high-persistence random proxies. Those facts are clearly apparent from McIntyre and McKitrick’s source code, as modified and run by Wegman et al themselves.

Make no mistake – this strikes at the heart of Wegman et al’s findings, which held that the writing of Mann et al was “somewhat obscure”, while McIntyre and McKitrick’s “criticisms” were found to be “valid” and their arguments “compelling”. And yet the only “compelling illustration” offered by Wegman et al was the supposed consistent production of “hockey sticks” from low-correlation red noise by the Mann et al algorithm. But the M&M simulation turned out to be based on nothing of the kind, and, to top it all, showed only the top 1% of simulated “hockey stick” PC1s.

So there you have it: Wegman et al’s endorsement of McIntyre and McKitrick’s “compelling” critique rests on abysmal scholarship, characterized by deliberate exclusion of relevant scientific literature, incompetent analysis and a complete lack of due diligence. It’s high time to admit the obvious: the Wegman report should be retracted.

[Update, November 22: According to USA Today’s Dan Vergano, a number of plagiarism experts have confirmed that the Wegman Report was “partly based on material copied from textbooks, Wikipedia and the writings of one of the scientists criticized in the report”. The allegations, first raised here in a series of posts starting in December 2009 (notably here and here), are now being formally addressed by statistician Edward Wegman’s employer, George Mason University. ]


Thanks for putting together this comprehensive review.

Nice detective work again, DC. I think it is always a good idea when posting about hockey sticks and Wegman, to remind readers that:

The hockey stick-shape temperature plot that shows modern climate considerably warmer than past climate has been verified by many scientists using different methodologies (PCA, CPS, EIV, isotopic analysis, & direct T measurements). Consider the odds that various international scientists using quite different data and quite different data analysis techniques can all be wrong in the same way. What are the odds that a hockey stick is always the shape of the wrong answer?

Any word on the investigation of Wegman?

Looks like it’s just higher resolution (increase the width and height arguments). That suggests Wegman spent more time understanding the plotting code than he did on the actual analysis.

Thanks for this nice post! Would it be difficult to actually plot 10 random hockeystick PC’s out of the 10000 generated, say the first 10. (or maybe in the sorted list #50,150,250 etc.) I am curious how much “hockey-stick” shape is in there, because I don’t have a clue how to interpret the HSI exactly.

It might be an idea to add an AR1 (.4) plot too…

Sterling work DC.

Your deep digging continues to demonstrate not only just how deep a hole Wegman et al dug, in their turn, for themselves in the production of their ‘report’, it nicely summarises just how spurious the whole fuss about M&M was from the start.

As so many commenters are observing, the chickens are now coming home to roost for those of the Denialati who crowed so loudly and for so long about ‘poor science’. There is surely growing discomfort in more than one contrarian coop.

Thanks for uncovering the “The Wegman Illusion”.

Great work, as always, DC.

From the main essay:

This would appear to be a heck of a cherry pick! A hundred of them, actually.

Wouldn’t cherry picking be covered in an introduction to statistics course 101? I never did I’m sorry to say, but I assume both McIntyre and Wegman would have taken something like that at some point.

The comparison of PCs from AR(.9) and AR(.2) is a little confusing because of the change of scale between the two plots (eg, I could imagine the plots on the left looking not out-of-place if overlaid on the plots on the right).

(Mind you, this was something that I also thought was odd about the Wegman report: they showed these hockey stick PCs with tiny absolute magnitudes from “red noise” and compared them to an order-of-magnitude larger hockey stick PCs from Mann et al., as in Figure 4.1)

-M

Boom. So Wegman not only plagiarized the social network analysis and background text, he also plagiarized the core statistical analysis- the crux of his supposed value add- albeit presumably with the full awareness and tacit (if not explicit) consent of its original authors. I’m reminded of the Cutter character in “Clear and Present Danger”, wherein he watches coolly from Langley as the bomb cam zooms in on the drug lord’s monster truck, throws his feet up on a table and takes a bite of what looks like a baby carrot. “Boom”. Lunar landscape where a work of high scholarship by impartial experts used to be.

While much of this criticism is, as indicated, not new, the part that is (in particular the degree to which Wegman et al was nothing more than a credible edifice for M&M’s disinformation, much as Judy Miller served as credible edifice for Dick Cheney’s Iraqi WMD disinformation), together with the other woeful scholarship and rank plagiarism that yourself and John Mashey have recently exposed means there’s nothing left of the Wegman Report to discredit.

Its every fiber has now been shredded, stomped, disemboweled and otherwise torn asunder. Its authors have been disgraced, and their thorough disrespect for science, truth and their own integrity laid bare for all to see. And of course, the reflection on McIntyre is clear, hence why this whole line of inquiry has driven him to conniption.

Speaking of which, this raises a few questions about ramifications for M&M’s ‘scholarly work’, such as it is. Seems to me that the methodological choices therein border on the fraudulent. What could be their justification for using the full autocorrelation structure of the climate proxies in their validation null proxies? The quality of MBH’s methodology was to be inferred by how strongly the signal it detected in the proxy data differed from the information-less base case. A priori M&M would’ve known their choice would tilt the field of play against MBH.

Since they had no evidence to support their unconventional methodological choice, how is it they are permitted to get away with that? Especially when you consider their novel and presumably undisclosed technique for generating a ‘random sample’ of PCs? Something’s rotten in Denmark if that can be allowed to stand. Isn’t getting a retraction of M&M from the journal that printed the nonsense a more worthy aim than the Wegman report?

milanovic:

Gavin’s Pussycat:

Even a median HSI (about 1.6, I reckon) upward bending simulated PC1 would recognizably be a hockey stick. The point, though, is that the 1% solution is just one more choice that exaggerates the “hockeystick” effect.

Now I clearly recognize that the “short-centered” PCA does promote “hockey stick” patterns to the first PC, and that this is not a proper methodology.

But from the very start, the biasing effect was exaggerated by focusing only on PC1 in the data reduction step, instead of the MBH algorithm end-to-end.

And even within this narrow focus, M&M’s choices greatly exaggerate the “hockey stick” effect, i.e. by omitting the MBH renormalization step and using an inappropriate noise model. The 1% cherrypick is just one more exaggeration.

A completely factored analysis would not only show representative hockey sticks, but also assess the impact on HSI of renormalization (as in the Huybers comment) *and* other noise model choices (perhaps AR1 with parameter 0.2, 0.4 or 0.9).

Maybe I’ll get around to doing that (and improve my R chops in the process), but I must admit even my patience for this nonsense is wearing thin.

So, if I do 10,000 simulations with random data, and keep the top 100 with an upwards bend, what did I demonstrate?

http://dmining-technology.com/bios.html

Looks like the Wegman and M&M scandal is beginning to look like the Bre-X scandal. Not surprising since both were orchestrated by mining executives. Cherry picking is just as dishonest as salting mining samples.

DC: thanks for this. Your work illustrates further the sloppiness, superficiality and partiality of Wegman’s review and you highlight, once more, McIntyre’s tendency to exaggerate his case. By approving of Wegman’s shoddy work, McIntyre has also lost any credibility that he might once, long ago, have claimed as an auditor.

One query, and forgive me if I have misunderstood something, but your Excel plot of HS35 does not appear to be the same as the one in the top left-hand corner of Fig 4-4. Also, you wrote:

Here is the dynamic chart in action, with #35 selected, followed by a close up of the matching first PC1 in Wegman et al Fig 4.4.

but the close-up graphic seems to be missing.

[DC: Fixed – thanks. I guess the dynamic chart was a little too dynamic when I took the original snapshot. ]

1. Bottom line is that there is a biasing effect of “short centering”. However, MM have consistently shot themselves in the ass by exaggerating the impact of this phenomenon for the PR impact.

To me, this shows a desire for winning games (and silly Internet argument ones), rather than for true understanding of phenomena. If instead they had clearly shown the phenomenon and how it VARIES AS A FUNCTION of incoming noise, then they would have done something of interest. For example if short centering were used in some other problem, even a nonclimatic one where the red noise was more random walk, then what they showed would be significant!

But this sort of curious and objective approach to a phenomenon, to look at it, test it and quantify it, was not their approach. Instead, their approach from the start was driven to “gotcha moments”. Pity. Cause it would have been a fun little detective find (like the Graham-Cumming with the uncertainty formula error, or even McI’s Harry or Y2K (minor, interesting, fun corrections!)).

And maybe even mathematically interesting to model the phenomenon (in case of short centering). But they weren’t really interested in getting arms around what was going on, WHETHER or NOT quantifying showed the impact minor. This is so bizarre to me. As the natural impulse I have in engineering, science, business, military, etc. is always to try to get a handle on something by quantifying! Heck a lot of times, I can quickly start to grasp something without knowing all the math or physics, but quantifying how big is it, how does it vary, what makes it vary. This is a way to think about new technical (or even business) problems quickly and to allow you to get inferences from other fields where you don’t have a BS in them. Heck, even when doing undergrad physics or math or chemistry problems would always look at the end result for size, just as a sanity check for correctness. I just think that is a normal impulse for how a curious analyst approaches things he does not yet understand fully to get his arms around them! So when someone does not want to quantify, when he avoids quick comparisons, my hair stands on end. (And I don’t buy the time excuse either. We are talking very high gain, obvious quantifications, and instead the guy has time for naked cartoons and other shitzandgiggles, for yearz and yearz!)

In a sense, I sympathize with your saying that mapping the full methodology space to show how different noise models and other methodology choices (standard deviation dividing) mesh with the short-centering biasing would be a pain to show the whole landscape. I can even feel a little (but less!) sympathy to McI for not mapping the whole thing (although also still surprised at the lack of curiosity to map a landscape.) But that said, even if he did not want to map the whole space and just show that there was a code/logic error in MBH, do it WITHOUT exaggerating the flaw! Heck, even do it with a sort of deference to his “opponent” to not overexaggerate the flaw (if he lacked the time to really map the landscape and just wanted to pick one example). But instead he has clearly taken the one pretty clear “error” and consistently and in many ways (PC1 versus overall recon, Preisendorfer n versus two PCs, overmodeled red noise, top 100!, standard deviation dividing confounding, etblaf*ckingcetera) exaggerated the flaw. Sad, sad, sad!!!

You know finding a logic/math error in a simulation is not unusual. I think every single time I have done a big (30+ sheets of Excel) financial model, there have been errors in it (and I’m not even the jock putting that stuff together, just get involved along the way). So, you should always find and fix errors. But making a big hubbub about something that does not change the answer is silly.

And this is why you quantify. For instance, if you find that you double discounted in your IRR formula, that is a BIG DEAL. Could queer a deal, especially when presenting to non-numerate execs who fasten on IRR, versus NPV. (Of course the difference between an anemic IRR and a juicy NPV should be the clue that you messed up IRR…and that’s why you think and compare and contrast!) On the other hand, being a year off in the revenue projections (but not investments) for an SBU that is 3% of the target portfolio, is just NOT going to change the answer from any kind of “do we pull the trigger, make the investment” point of view. And, yeah, the errors might scare you. Might make you look for more, for other similar ones, have someone else (an independent analyst) check the code. But that’s fine. And heck, it’s normal. It’s life. It’s thinking. It’s ANALYSIS! I really can’t believe that this habit of mind needs to be explained or defended. Especially to out of field skeptics!

2. I’m familiar with most of the long, tangled history here, but the “top 100” is a great new gotcha. I had never heard of that. Kudos, little guy! Can you please quantify how much difference it makes on the results to take “top 100” out of the 10,000 versus using some “random 100”?

3. Thank you for showing the difference between a real AR0.2 and claimed Wegman AR0.9. I agree that the biasing artifact is highly sensitive to the amount of that. Actually McI had shown some of that in his CA posts (not in the sort of objective non-argumentative manner I would prefer, but with a clear bias to arguing larger AR is “true”). At the same time, he showed differences; STILL, he had shown somewhen how different amounts of random walk start to affect things. He had shown some of the “how red are your proxies” dependence (maybe he didn’t take it all the way through an algorithm, but just showed series themselves). That was one of the things that really set me off, when I saw the Wegman “0.2”. Not only did it not match NRC, it didn’t even seem to match McI blathering! It was just too low!

4. I corresponded with Witcher and Hosking back in the day (and they have no, no, NO interest in this p***ing match). The person who really put together the “full ACF function” was Witcher (or whatever his name was, too lazy to look, but it is in that one CA thread). McI should cite him, not Hosking.

There was a substantial amount of work to go from the Hosking paper to the Witcher code, and Hosking has definitely not checked it! Also, the 1981 paper is the more appropriate Hosking reference. Steve just gets way too cute with cites and I don’t think he really understands the basic idea of how cites are supposed to work, but just picks stuff to be cute (there are other examples showing him not grasping concept).

P.s. cross-posted to AMAC in case you don’t like the earthy remarks (I actually self snipped a lot of them).

Let me add excellent DC. The work that must have gone into the above is amazing. I hope this does not add to your workload much more, but would it make sense to plot some random samples as milanovic suggested above or are there scaling issues?

I had a discussion with Steve McIntyre a couple of years ago on the scaling issue but I also asked about how eigenvalues fit into the topic, i.e. were the eigenvalues from the “noise” PCs smaller than the eigenvalues from the reconstruction. The answer was somewhat evasive.

best,

John

> even my patience for this nonsense is wearing thin

Human after all 🙂

Very illuminating!

Pingback: Anniversario » Ocasapiens - Blog - Repubblica.it

> I had a discussion with Steve McIntyre a couple of years ago on the scaling issue but I also asked about how eigenvalues fit into the topic, i.e. were the eigenvalues from the “noise” PCs smaller than the eigenvalues from the reconstruction. The answer was somewhat evasive.

From M&M 2005:

The loadings on the first eigenvalues were inflated by the MBH98 method. Without the transformation, the median fraction of explained variance of the PC1 was only 4.1% (99th percentile – 5.5%). Under the MBH98 transformation, the median fraction of explained variance from PC1 was 13% (99th percentile – 23%), often making the PC1 appear to be a “dominant” signal, even though the network is only noise.

So the median eigenvalue for M&M’s “centered” leading PCs is about 0.04; the median eigenvalue for the “non-centered” leading PCs is about 0.13.

Compare those with Mann’s “hockey-stick” centered/non-centered PCA leading eigenvalues (~.2 and ~.4, respectively).

So even with M&M’s noise with autocorrelation length on the order of half of the time-series length, their eigenvalue spectrum was still much flatter than Mann’s. There’s no way that any competent analyst would have conflated the two cases.

Nice job, DC – I’m impressed. I have always found it strange when McIntyre et al consistently make the leap of logic that a poor choice of methodology, such as Mann’s PCA, is equivalent to a deliberate deception, and the opposite of their findings being true.

Kate

Deep: The biggest issue is Wegman not even knowing the nature of the problem (in terms of a feel for the complexity). Remember Jolliffe said it would take him a few weeks, plus the code and access to the principals to ask questions, to really weed through MBH and MM to assess impact and correctness of the different methodology choices. But Wegman arguably did a worse job than even McIntyre in terms of looking at the algorithm. And he went after it with himself (probably not a top data analyst at this point in time) along with a poor student and then some other dude. He didn’t really even have a feel for what was involved or give it real care and attention. Too much trumpeting of the Wegman CV, not enough hard core work. I can forgive a mistake, but not so easily forgive not even making a good effort.

It’s sterling service DC, thanks for your efforts!

As someone who only has a passing familiarity with PCA, can someone explain what the original proxy data actually looks like? I’m used to using PCA in an ecological context with a multivariate dataset where you might have all sorts of different types of data like various species abundances, and you plot PC1 vs PC2 to look at how different communities fall out. But in the case of proxy data, is each input variable one temperature series from one (or one set of) tree rings? And the PCA then tells you which proxies have the best coherence?

Or does each proxy data set have multiple potential temperature predictors, and the PCA is a way of telling you which predictors explain the most temperature variation?

Actually in the case of the infamous Mann papers PCA was used more as a means of data reduction. Each series (a site chronology) consists of multiple trees from a single site (a collection of stands, trees from individual plots) which give a history for a particular area, usually a few km square.

Because North America was so heavily represented in the proxy dataset, Mann chose to use PCA to reduce the number of series so as not to overweight NA in the resulting reconstruction. Because of the differing environmental influences at each site, treating the composite of all NA sites leads to multiple predictors. In the incorrect version of PCA Mann used this led to the promotion of the bristlecone pine series to PC1, when the correct version left them at PC4, explaining only about 5% (IIRC) of the explained variance.

Your second hypothesis is very close to the mark. And someone, please correct me if I am, as is very likely, wrong.

The PCA only tells you which patterns explain most of the proxy variation.

The calibration against temperatures happens at a later step.

This one is pretty much right.

n.b. “coherence” has a particular meaning in statistics, but I’m assuming you meant it in the plain English sense.

Uh, no. Proxies were chosen a priori because they calibrated well against local or regional temperatures (and/or moisture) in previous studies. PCA was performed as the first step (after areal adjustment) on the gridded instrumental data, 1902 – 1995 and the individual proxy series from 1902 – 1980 were calibrated against the corresponding EOFs of the instrumental data matrix by singular value decomposition to determine retention of reconstructed PCs for each proxy series and then tested for robustness against the 1854 – 1902 validation period as well as a smaller subset of instrumental/historical EOFs going back to the 16th century. The movement of PC1 in the calibration period to PC4 in calibration + validation steps is likely due to the fact that it corresponds to the elevated trend in global temperatures being the most significant pattern in the 20th century greatly reduced by inclusion of earlier temporal variance that doesn’t have this positive trend.

LB,

The PCA focused on by M&M is *not* the instrumental temperature processing (by definition that couldn’t be short-centered).

Rather it’s in the dimensionality reduction pre-processing applied (in this case) to the 1400 North American tree-ring network. This generated leading PCs that represented the 70 proxy series. Those PCs were then used along with other (sparser) proxy series in calibration against the instrumental temperature PCs, as you say.

My understanding is that this reduction was to prevent swamping of the other proxies by the denser tree-ring sub-network, and also to preclude overfitting.

I guess on rereading we’re all in violent agreement here. But I don’t see that pete was wrong in his characterization of calibration as a “later step” (i.e. subsequent to the tree-ring sub-network reduction).

Dumb question: what do you get if you simply add all the 10K and all the 0.1K?

The HSI is bimodal — there’s roughly equal numbers of upside-down and rightside-up hockey stick series. “Simply” add them together and they should cancel out for the full 10K.

A quick glance at the R code suggests the 100 hockeysticks stored in the equipment shed are the 1% with the most positive HSI (not largest in absolute value). So add up the 100 and probably a hockeystick with about 1/10th the relative amplitude in the zig-zags.

I presume you don’t mean literally adding, but …

Half the PC1s point down. But orientation is irrelevant really, so the answer is the average PC1 (or average of all the 10 K PC1s if all “flipped” the same way) would have a hockey stick index of about 1.6. That is, on average the calibration period mean is 1.6 stdev above (or below) the overall series mean. The top 100 average 1.96 HSI.

So this is best thought of as one more exaggeration (~20%) on top of all the others. It would be interesting, though, to see the exaggerating effect of displaying the top 1% as opposed to a truly random selection in other processing scenarios (e.g. with Huybers style renormalization, and/or AR1 noise).

And of course a truly random selection would point both up and down. But it’s so bothersome to have to explain why that is not relevant, and then, too, the visual effect would be lacking.
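For anyone who wants to play with the numbers, here is a minimal Python sketch of the hockey stick index as described above: the calibration-period mean minus the whole-series mean, in units of the whole-series standard deviation. The function name, the toy series, and the choice of the population standard deviation are assumptions made for illustration; this is not M&M's R code.

```python
import statistics

def hockey_stick_index(series, calib_len):
    """(mean of the last calib_len points - overall mean) / overall sd."""
    overall_mean = statistics.fmean(series)
    # Assumption: population sd; using the sample sd would shift the value slightly.
    overall_sd = statistics.pstdev(series)
    calib_mean = statistics.fmean(series[-calib_len:])
    return (calib_mean - overall_mean) / overall_sd

# Toy series: a flat "shaft" of 80 points followed by a raised "blade" of 20.
toy = [0.0] * 80 + [1.0] * 20
flipped = [-v for v in toy]  # same shape, pointing down

hsi_up = hockey_stick_index(toy, 20)
hsi_down = hockey_stick_index(flipped, 20)
print(hsi_up, hsi_down)
```

Flipping the series only changes the sign of the index, which is why the orientation of a simulated PC1 carries no information by itself.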

Well actually, being a simple old bunny Eli does mean simply adding. If nothing else this is the simplest (insert word meaning nonsense here) test on what anyone claims to be random data.

Well, then pete had the right answer, although I think the amplitude of the zig-zags of the hockey stick for the 100 added together (or let’s say averaged at each time t) would be more than one-tenth of the individual PC1s. I’ll report back :>)

Actually, on reflection *and* inspection, the squiggles are that small (or even smaller), although there is some centennial undulation as well. The HSI is 2.4, for what it’s worth. The 20th century portion is interesting, too, steep rise to 1930, curved top peaking at 1940, and then noticeable drop off to 1980. So a hockey stick blade, but with an extreme concave bend at the end (like the NRC chart actually).

The noise in each simulated PC1 will be autocorrelated; however there will be very little correlation amongst the separate realisations of the PC1. So averaging them should reduce the noise by square root N.

Note that the HSI is relative to the total variance of the PC1 — averaging the realisations reduces the variance which increases the HSI. If you keep increasing N, you’ll eventually converge to a HSI of 2.9, no matter how large or small the AHS effect is. So taking the HSI of the averaged PC1 is probably meaningless.
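The square-root-of-N point can be checked with a quick simulation. The sketch below is only an illustration with independent AR1(.9) noise series standing in for the separate realisations (it does not use the archived PC1s); the seed, series length and number of realisations are arbitrary assumptions.

```python
import random
import statistics

def ar1(n, phi, rng):
    """x[t] = phi * x[t-1] + e[t], e[t] standard normal, started from zero."""
    x, out = 0.0, []
    for _ in range(n):
        x = phi * x + rng.gauss(0.0, 1.0)
        out.append(x)
    return out

rng = random.Random(0)
n_years, n_real = 2000, 100

# Each realisation is strongly autocorrelated in time,
# but the realisations are independent of each other.
runs = [ar1(n_years, 0.9, rng) for _ in range(n_real)]

mean_single_sd = statistics.fmean(statistics.pstdev(r) for r in runs)
averaged = [statistics.fmean(col) for col in zip(*runs)]  # average at each time t
ratio = mean_single_sd / statistics.pstdev(averaged)
print(ratio)  # expected to land near sqrt(100) = 10
```

Because the standard deviation in the HSI denominator shrinks this way while any shared shape does not, the HSI of an averaged series grows with N, which is the reason for caution given above.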

DC, doesn’t what you say here sort of make the point? The M&M technique incorporated at least some of the signal. The trends you describe for the 20th century look an awful lot like the temperature record.

Not very good for them in either their “trendless red noise” findings nor their finding of the much higher threshold for statistical significance in the RE measure. In the words of Horatio Algernon they’ve been “emathsculated”.

The curve at the top is a lot more symmetrical than the real record. Also, in the NRC version it arises from AR1(.9) noise which has very high auto-correlation, but no temp signal as such.

“So this is best thought of as one more exaggeration (~20%) on top of all the others. ”

This reminds me: it might help people to have a pair of nice short flow diagrams showing:

a) “right” way to do it v

b) the way MM/WR use, with some idea of “exaggeration” or distortion each step.

For some reason, I think of a UNIX pipeline where each command distorts the calculations along the pipeline.

I always like reading these articles kind of like carving a turkey, a lot of trimming around the edges but never getting to the meat of the matter. AGW has been thoroughly exagerated for quite some time now. I am looking forward to the cancun conference, no major world leaders in attendance, no agreement of any kind another fiasco in the making.

Keep up the good work, meanwhile the rest of the world has moved on to more pressing matters like how to more effectively convert bitumen to Oil.

[DC: Hmmmm, a content free and relevance free post, with ClimateDepot link back (removed of course). I doubt I will bother with this commenter in future. In the mean time, let’s not feed the trolls. ]

He doesn’t know how to carve a turkey, nor how to spell, so I don’t think we’ll be missing much regarding climate science …

Ah something like:

acf | hoskings.sim | sort | uniq | head -2 == 1000% distortion?

I don’t fully understand this, but it looks as if we’re dealing with a case of the metaphorical “thumb on the scales”–which would be an instantiation of scholarship much worse than just “shoddy.”

Great post, DC. Just when I thought nothing could possibly be added to the labyrinthine hockey-stick debate, you manage to pull together much of the argument in a very clear way, and bring something new to it, answering questions I wondered about when I first read MM. Bookmarked!

I can see that in any case it’s odd of MM to compare one of their ‘sample’ PC1s with the full MBH reconstruction (MM fig. 1 / Wegman fig. 4.1). But I’m afraid I’m still clueless enough about the methodology that I have to ask: what about their scaled-up hockey stick being an order of magnitude ‘smaller’ than the MBH curve (see the y axis)? Is this relevant to the argument at all?

I’ve been trying to recreate the NRC figure, but without success. I’m a Matlab user and not really familiar with R, so their code isn’t all that helpful to me.

I’ve created AR1(.9) noise for each series using the filter function in Matlab. As far as I can tell, this is an appropriate method (yes?):

filter(1,[1 -.9],randn(Years,1))

I then short-center each series over the calibration period using the MBH method described by Huybers (‘IT’ refers to each series):

(Series(:,IT)-mean(Series(Calib,IT)))./std(detrend(Series(Calib,IT)))

I then run the PCA based off of the covariance matrix:

pcacov(cov(NormSeries))

PC scores for each year are then calculated by taking the sum of the products of normalized values and loadings. I’m sure there’s a prettier way, but I’m just running this within loops:

scores(YR,PC) = -sum(NormSeries(YR,:).*coeff(:,PC)')

I can confirm that these scores match up with those calculated using the princomp function when data are normalized by just subtracting the mean.

The only way that I can get anything resembling a hockeystick is if I manually insert a hockeystick into each series. The NRC AR1(.9) figure is so striking, but I still can’t figure out why simply “short-centering” would produce such an extreme effect from random red noise, even with high autocorrelation. For me, all short-centering does is make it so that the mean PC1 value over the calibration period is equal to zero.

Granted, I did this really quickly (so there could be a stupid mistake), but am I missing something? I still don’t see why short-centering should create such a strong pattern that isn’t found in the original data.
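For readers without Matlab: the call filter(1,[1 -.9],randn(Years,1)) quoted above amounts to the AR1 recursion x[t] = 0.9·x[t-1] + e[t], started from zero. A minimal Python sketch of that recursion follows, with hypothetical function names and an arbitrary seed and series length, estimating the lag-1 autocorrelation for the two noise levels discussed in this thread.

```python
import random
import statistics

def ar1_noise(n, phi, rng):
    """x[t] = phi * x[t-1] + e[t], with e[t] standard normal and x[-1] = 0."""
    x, out = 0.0, []
    for _ in range(n):
        x = phi * x + rng.gauss(0.0, 1.0)
        out.append(x)
    return out

def lag1_autocorr(x):
    """Sample lag-1 autocorrelation about the series mean."""
    m = statistics.fmean(x)
    num = sum((a - m) * (b - m) for a, b in zip(x, x[1:]))
    den = sum((a - m) ** 2 for a in x)
    return num / den

rng = random.Random(42)
r_low = lag1_autocorr(ar1_noise(5000, 0.2, rng))   # weakly persistent noise
r_high = lag1_autocorr(ar1_noise(5000, 0.9, rng))  # strongly persistent noise
print(r_low, r_high)
```

The estimated values should sit close to the phi that generated them, which is a cheap sanity check that the noise model is the one intended.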

I hesitate to call this a “mistake”, since using the built in methods is usually more sensible than rolling your own.

The cov method is going to centre NormSeries properly, making your earlier short-centring irrelevant.

You’ll have to roll your own “short-centered” PCA, although I’m not sure how Matlab will do that (as I’ve never used Matlab).

In the NRC code the “short-centered” standardization of each generated series is done, then the svd transformation is applied to the resulting matrix of “short-centered” series. (Does that help?)

for (j in 1:p) {
    b <- arima.sim(model = list(ar = phi), n);
    a[ , j] <- b - mean(b[baseline]);
}
invisible(svd(a)$u[,1]);

Oh, I see. Matlab PcaCov() will work from supplied covariance matrix. So never mind – listen to pete (always a good idea).

I’m not a Matlab user either, but from your code it looks like you only need a roll-your-own version of cov.

X* X / (N-1) should do it, where * is the matrix transpose.
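To see why the built-in covariance undoes the short-centering while the rolled-your-own cross-product does not, here is a small Python sketch on two made-up series. All names and numbers are illustrative assumptions; only the two formulas (sample covariance versus X'X/(N-1) without re-centering) come from the discussion above.

```python
import statistics

def sample_cov(x, y):
    """Sample covariance: subtracts each series' full-period mean first."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / (len(x) - 1)

def raw_cross_product(x, y):
    """One entry of X'X / (N-1), with no re-centering of the inputs."""
    return sum(a * b for a, b in zip(x, y)) / (len(x) - 1)

def short_center(x, calib_len):
    """Subtract the mean of the last calib_len points only."""
    m = statistics.fmean(x[-calib_len:])
    return [v - m for v in x]

# Two toy series whose last two points (the "calibration period") sit high.
x = [0.0, 0.1, -0.2, 0.3, 0.0, 1.0, 1.2]
y = [0.2, -0.1, 0.0, 0.1, -0.2, 0.9, 1.1]
xs, ys = short_center(x, 2), short_center(y, 2)

cov_before = sample_cov(x, y)
cov_after = sample_cov(xs, ys)         # unchanged: the shift is re-centred away
raw_after = raw_cross_product(xs, ys)  # keeps the offset left by short-centering
print(cov_before, cov_after, raw_after)
```

Subtracting any constant from a column leaves the sample covariance untouched, so the short-centering only survives when the cross-product is formed directly from the short-centered matrix.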

Thanks Pete – it turns out that the cov function in Matlab indeed normalizes the data. I’ve done it as above (which is the same code as within the function – after it normalizes it) and I indeed get a figure that matches up with the NRC.

I ran 1000 iterations for AR1(.9) and AR1(.2) and calculated the HSI values.

For AR1(.2), mean HSI is 0.82 with a std of 0.11. The max was 1.12.

For AR1(.9), mean HSI is 1.94 with a std of 0.11. The max was 2.18

So while 99% of MM’s pseudo proxies show HSI values above 1.0, it’s essentially the opposite with AR1(.2) noise.

Thanks to DC for diving into this so thoroughly and making me curious to try it myself. I certainly learned a thing or two…

So I would hope to see a correction on record for the GRL article?

I’ll second that.

Horatio:

The comment about statistics fits only if you think the Wegman Report was an exemplar of real statistics.

I certainly don’t think that, and in fact over the long term I suspect the people who will be most appalled by this mess are good statisticians.

Horatio’s comment was tongue in cheek.

Horatio: I thought it might, but I emphasize this because I think there is evidence (various places in SSWR) that the Wegman Report and later talks by Wegman and Said were doing what they could to foster a statisticians-vs-climate scientists fight. So, this is one where if you mean tongue in cheek, it is helpful to say so.

I don’t have a copy handy, but I labeled this the “faux fight” meme, which didn’t work very well, even with McShane and Wyner’s attempt to try again.

I think the “meme fight” can be understood without any sort of coordination or conspiracy. It is common for opposing sides of debates to try to push an angle. And there is even some small validity to the angle. (Overplayed I believe, but the way fields contribute to each other can lead to these gaps.) You can even go back to Hotelling and read his famous essay (go research it) on the role of a Department of Statistics. It is a deeper thread than just the recent climate issue. Has to do with conflicts of Departmental Statistics versus non. Math versus Stats. Who should teach intro courses, yada yada. Seriously go read it and also get Rabett’s take. And I am not saying Hotelling is all right or all wrong (although he was a great man). Just read it and think about it, to have some feel for the landscape. Even if you change no opinions, it will give you some flavor for how Wegman sees things. Really man. Hunt down that essay. It is a total classic in the field of statistics. It’s in the big blue Tukey book. (I think.)

The “climatologists are not formally statistical enough” line was being pushed well before Wegman. I agree with it a tiny bit. But I think things will get better. And I like guys like Annan. Mike on the other hand is cocky. And bald. Did I mention bald? I could take him in a fight too. 🙂

[DC: Cut the macho stuff, please. Or I can do it for you. Thanks! ]

Poly:

My “faux fight” meme came only after:

a) studying Wegman’s various talks to statistics audiences light on climate expertise

b) Looking at Wegman’s absurd talk to 2007 NCAR, about which I got feedback from 2 attendees, both rather negative.

c) and exchanging numerous emails with serious statisticians, who were in fact dismayed at Wegman, in some cases expressed that to him, but in any case, were ignored.

The axe-grinding went way beyond the usual inter-disciplinary bickering, including specifically the topic of who teaches statistics and how, something I’ve discussed over the years at various schools with professors, department heads and one university president, having given invited (simple) statistics-related lectures at 7 schools, most of which rank in Top 100 on most people’s lists.

People are entitled to opinions, but minimally-informed ones don’t really add much or encourage one to read them. In case people wonder, even given the massive length of SSWR, much was known that was *not* written down, but none of it weakens what was written. Some is worse.

I would welcome a story about these exchanges with serious statisticians.

It would complement well the stories so far told here.

I’m not really disagreeing that it is faux or not. I just think you could have a better feel for the meme if you read Hotelling. Heck you can read it and say Hotelling was wrong. Or that Wegman is misapplying Hotelling. That’s really not my point (right or wrong, per se). I just want you to have a little deeper understanding of the development of the ideas, of the meme background. It’s like you could disagree with (or agree with!) invading Iraq, but a deeper understanding of the evolution of different “neoconservative” ideas historically, would improve your criticism. Heck, you can even read Hotelling and then tell TCO, it was a dry hole.

http://projecteuclid.org/DPubS/Repository/1.0/Disseminate?view=body&id=pdf_1&handle=euclid.bsmsp/1166219196

http://www.stat.berkeley.edu/~terry/hotelling/HotellingLect1.pdf (see pages 23 on)

P.s. Since I know y’all love these social engineering connections, realize that there is a UNC connection of Wegman and Hotelling. And again, even if Wegman is “wrong”, understanding that he will have read and been deeply influenced by this “stats policy” essay of Hotelling will at least give you a little insight into Wegman’s thought process.

1. Here is a list of links with various discussion of Hotelling’s paper.

http://projecteuclid.org/DPubS?service=UI&version=1.0&verb=Display&handle=euclid.ss/1177013002

click for the different comments and then go to the bottom to get the PDF.

2. Again, I’m not at all trying to defend Wegman (or Hotelling). Just to give you a tiny insight into “where he is coming from”.

3. My (TCO personal view now) thinking is that you have to take Hotelling with a grain of salt. He is a chairman of a Statistics Department defending turf in a sense. One can imagine similar debates as to who should teach fluid dynamics and heat transfer to chemical engineers (the ChemE profs or MechE?) and etc. There are pluses and minuses of each approach (and Hotelling has not really won his argument, if anything maybe he has sort of lost it). That said, stats is a very easy thing to mess up in terms of logic flaws. And people do all the time (just look at a lot of the medical literature). So along with the (good for the world) increased USE of statistics in applied fields has gone continued theoretical lapses. Mike in a way, with his (opinion) use of new methods without thorough (I mean very thorough and theoretical, not JoC) validation of them, sort of fits into this pattern. It’s also interesting that Hotelling notes the importance of a statistician in having dug deep into at least one applied field (the issues of data collection and all that, not just up high linear algebra theory). I think in this sense, you can see a lot of the gaps of Weggie and M&W (too cocky by far about not knowing the paleoclimate background and how it affects stats).

Similarly, sort of fitting into this concern…I was very bothered that Wegman did not FOLLOW UP his initial report with more work in paleoclimate. I mean, he’s got theoretical training, he’s been pulled into this pretty interesting field, and there are probably opportunities to make advances in it (it is “rich”). But there was really nothing progressing from his report. No deeper analyses, no retractions or amendments from grown understanding, no tangents into new problems, no bringing back of things he learned from this work to other (non climate) fields. The lack of such activity worries me that he is really not capable (or interested) in bringing it. Heck, really McI (for all his warts and his lack of a Ph.D. in stats) has looked and thought about these concepts more than Weggie. And of course, the real advances are coming from Li and Huybers and Annan and the like. Heck, I would even put M&W (which I remain very critical of, both for results and for cockiness about ability to make advances in the field without really coming up to speed) in front of Wegman at this point.

The Wegman report was described as “independent” and “peer-reviewed” by its Republican sponsors. I plan to do a post on these claims. For now, I’ll note that mechanical “reproduction” of figures produced by archived data using the original study’s R script hardly constitutes “independent verification” (a point made very eloquently by Horatio).

Ah, but then you do not have your auditor merit badge

“and “peer-reviewed” by its Republican sponsors.”

Which, of course, Wegman agreed with at the hearing.

Looking forward to your post, DC. It’s been one of the biggest memes about the Wegman Report.

J. Bowers: you can get a headstart about reviewers:

See SSWR, specifically A.1.

willard: I’m not going to say any more about conversations with statisticians. If you read SSWR carefully you can probably make informed guesses about a fraction of those I might have communicated with. I left out a lot, given:

a) Issues that people were pretty peeved about, but thought were between them and Wegman …

b) Things that people remember telling Wegman, but either verbally or via email they didn’t save. [That kind of stuff would come out in testimony, but I wasn’t going to use it.]

c) Second-hand stuff like “X was really worried about Wegman, for reason Y.” (in fact, X indeed was worried about Y, and was delighted later to be sent a pointer to SSWR when it came out.)

d) Opinions that certainly were consistent, but not really verifiable, and in some cases perhaps not printable.

e) And of course, discussions that happened after SSWR came out.

Unsurprisingly, there is a fairly tight social network among senior statisticians. They talk to each other also.

Wegman certainly knew the reviewers. But anyone who thinks the reviewers were all just Wegman buddies asked to bless the WR, really needs to read A.1 and rethink that.

This long ago ClimateAudit thread (September 2006, two months after the Wegman Report) is interesting to read now.

We have Steve and bender claiming – over and over – that Wegman replicated M&M for the case of AR1(0.2) noise instead of ARFIMA, a claim we now know is baseless. TCO questions whether this is really the case, since the Wegman results are so similar to M&M. I wonder when McIntyre finally realized that Wegman hadn’t used AR1, but merely thought M&M themselves had. The latest “Wegman used AR1” claim from McIntyre is from 2008, unless someone can find a more recent one.

But TCO also raised another question that I have wondered about.

Looking at the definitions in waveslim, hoskings.sim asks for the “autocovariance sequence” or acvs. It is not clear to me that this is the same thing as the “acf” that R calculates. Not clear if it is the right argument to be passed over.

Then:

I’ve been in touch with 3 big guys in this area of work. Nothing to report yet. Just trying to figure out what Steve did. Exactly.

If the acf is just a scaled version of the acvs, I suppose it would be valid input to the creation ARFIMA synthetic series.

But, TCO, did you get a definitive answer on this?

BTW, I think Hoskings 1984 is the right reference since that’s the one where he described the creation of synthetic ARFIMA series.

Deep: I don’t have much more to report. Yes, I could have pursued it harder, but got really annoyed with the evasions and the NOT WANTING others to understand what was done. I ended up saying eff it. You guys are taking it further than I have.

I would think they should reference Witcher or whatever his name is (not Hoskings). Hoskings did not develop the code even though Witcher named it for him, and doesn’t really care much about it or stand behind it. I guess if you wanted to go overboard, you could reference both, but really referencing Witcher is the key ref, for me. If you are trying to see what was done, to replicate, etc. you really want to go to Witcher, not to Hoskings. Hoskings is actually too far back.

Deepie:

Just in case you didn’t know, there is (I think or I think Steve has said) some sort of a fork in the MM code. So that you can use it either in the AR1 method OR in the “full ACF method”. So talking about “what is in the code” is not even sufficient. You have to ask what was done for each specific figure (or number referred to in text) within a paper or a blog post.

Steve has exploited the existence of this fork rhetorically a lot (when challenged on full ACF, he mentions the AR1 existing, etc.). The bad thing though is that he did not really report, in sort of the Huyber-Berger full factorial method, “this is what you get from A, this is what you get from B”. If he had, that would have been interesting. He had some small allusions, but definitely more in a CYA (bury the info in an Enron footnote in the 10K) type manner. Not a clear layout. And definitely evading answers on definitional questions. (You see the same pattern with not defining terms on the MMH fiasco paper.)

Instead, he always tries to shift and evade disaggregation. Not sure how much of this is rhetorical dishonesty games (the guy actually likes Clinton’s word parsing games) and how much is just him being warped or stupid. Probably some self-satisfying combination of dishonesty and stupidity. I get tired from unraveling the snail though.

I’m aware of the fork. To test AR1 that’s what I’d use. I’d also have to correct the code to generate, store and display a new “hockey stick” sample each run. But I digress …

I don’t see any evidence that M&M or Wegman et al or anyone else actually used the fork. But for sure M&M should have reported AR1 at different levels.

As for Wegman et al, Fig 4-2 would have been very different with AR1(.2) (although Fig 4-4 was – mistakenly – hardcoded). And they kept saying that M&M used conventional “red noise”. If they had used the fork, wouldn’t they have explicitly said “M&M used ARFIMA noise, but we show the same effect with the AR1 option M&M provided”?

I’m pretty sure Wegman’s team must have modified McI’s code, if only to get a higher resolution of Fig 4-4.

But even with method2="arima", McI’s code uses heterogeneous AR(1) coefficients. Does anyone know where 0.2 came from?

So that sounds like “empirical” AR1, based on fitting AR1 to each of the 70 real proxy series.

Anyway, my best guess is that Wegman et al misread M&M. There is a reference in M&M to AR1(.2) as being used for benchmarking by paleoclimatologists. Perhaps the repeated misleading reference to red noise led Wegman et al to confuse the two methodologies.
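For anyone trying to keep the two flavours of AR1 straight, here is a minimal sketch of the distinction, not McI’s actual code. It assumes “empirical” AR1 means one fitted coefficient per proxy, and it estimates that coefficient with the plain lag-1 sample autocorrelation (a simplification; an ARIMA fit would differ in detail). The three stand-in proxies, the series length of 581 and the helper names lag1_autocorr and simulate_ar1 are all made up for illustration.

```python
import numpy as np

def lag1_autocorr(x):
    """Sample lag-1 autocorrelation of a 1-D series (sample mean removed)."""
    x = np.asarray(x, dtype=float)
    d = x - x.mean()
    return float(np.dot(d[:-1], d[1:]) / np.dot(d, d))

def simulate_ar1(phi, n, rng):
    """Simulate n points of x[t] = phi * x[t-1] + e[t], e ~ N(0, 1)."""
    e = rng.standard_normal(n)
    x = np.empty(n)
    x[0] = e[0]
    for t in range(1, n):
        x[t] = phi * x[t - 1] + e[t]
    return x

rng = np.random.default_rng(0)
n_years = 581  # assumed series length, for illustration only

# Stand-in "proxies": hypothetical series with different persistence.
proxies = [simulate_ar1(phi, n_years, rng) for phi in (0.1, 0.5, 0.9)]

# "Empirical" AR1: one fitted coefficient per proxy (heterogeneous).
empirical_phis = [lag1_autocorr(p) for p in proxies]

# Fixed AR1(0.2): the same low coefficient for every pseudo-proxy.
fixed_phis = [0.2] * len(proxies)

null_empirical = [simulate_ar1(phi, n_years, rng) for phi in empirical_phis]
null_fixed = [simulate_ar1(phi, n_years, rng) for phi in fixed_phis]

print("fitted coefficients:", np.round(empirical_phis, 2))
```

The point of the comparison is only that the fitted coefficients vary from series to series and can be far above 0.2, so the two null models are not interchangeable.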

Good. Figured you had to know the fork, but just triple checking.

I pretty much agree that it seems others were not really clear on the forking (e.g. Wegman) and I think McI’s confused presentation of things, rather than a clear “this does this, that does that” type of discussion, is a lot of the reason for it. So in a sense that helps explain others not seeing some of these issues. You really have to parse hard and do a lot of double-checking, to make sure not to get caught up in McI’s snares (and I’m sure he’ll cite some throwaway remarks in EE05 or the like, but those are the CYA footnotes, not the clear layout).

Only other place you might look for an issue is the Huybers comment. I remember asking him which fork he used and never got an answer (and did not pursue further). So there might be some possibility that Huybers used the AR1 (in other words supports McI’s point that the effect had been shown). Or that Huybers used a mistaken fork. (No accusation and Huybers is a crackerjack brain, just another string in the web, and another place to check for an issue.) And it would not change the fundamental “catches” of the Huybers comment. Just in the back of my head is another place for checking stuff.

Also, moving past that ancient history, there is also the issue of INTERACTIONS of method choices (e.g. Huybers’ catch on the standard deviation dividing interacting with the type of noise). You have mentioned this interaction possibility before, so not an aha to you. (Kudos, btw.) In terms of thinking about it, I actually think some model of a full factorial is more the way to think of things than the Mashey linear summation. Since there can be interactions, it’s not really linear anyhow. Plus it allows understanding the landscape independent of arguments about which choices are right/wrong, and allows picking different blends based on opinions (for instance the Huybers issue).

In any case, looking at that whole landscape, for most combinations in the full factorial, will (TCO assertion) lead to a view that Mannian short-centering DOES BIAS PC1 and that McI DOES EXAGGERATE how much it biases! 😉
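To make the “full factorial” idea concrete, here is a rough sketch of how the design grid could be laid out before running anything. The factor names and levels are my own illustrative labels picked from choices mentioned in this thread, not a list taken from any paper, and the sketch only enumerates the combinations; it does not run a PCA.

```python
from itertools import product

# Hypothetical factor levels, named for illustration only.
factors = {
    "centering": ["short (calibration period)", "full (whole series)"],
    "noise_model": ["AR1(0.2)", "empirical AR1", "full ACF (ARFIMA)"],
    "pca_type": ["covariance PCA", "correlation PCA"],
}

# One dict per run: every level of every factor crossed with all the others.
design = [dict(zip(factors, levels)) for levels in product(*factors.values())]

for run_id, run in enumerate(design, start=1):
    print(run_id, run)
```

Reporting one result per row of such a grid is what would let a reader see interactions, rather than adding up the effect of each choice separately.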

Thanks, that’s what I was looking for.

I’d put “empirical AR1” in the same box as “persistent trendless red noise” — weird McI terminology that should be replaced with something more informative.

Touche. How about so-called “empirical AR1”? (I think the term actually comes from McIntyre acolytes McShane and Wyner; I don’t know what McIntyre calls it).

Poly…

“Mashey linear summation” ???

I think you have me confused with Rabetts, or someone.

OK I looked it up:

Thus, the ACVS differs from ACS in that the mean µ has been removed from the data sequence. In other words, ACVS is equivalent to its ACS if the mean of the data sequence is zero. For continuously sampled processes, the ACS and ACVS are known as the autocorrelation function (ACF) and autocovariance function (ACVF) respectively.

So this will work fine, as long as each input series to acf() has mean 0. Or if the acf() call specifies return of the ACVF, not the ACF. If it doesn’t …? Pete, any thoughts?

(BTW, it does look to me that acf() is called with the default type parameter i.e. type is “correlation”, not “covariance”. And the input proxies appear not to have zero mean. So this may be yet another issue).

I’d say that using hosking.sim is a mistake regardless of whether you use the acf or the acvf.

I’m speculating here, but I suspect it makes no difference if you’re using correlation PCA, but some difference if you’re using covariance PCA.

As you noted, you could add type="covariance" to McI’s code to test this.

I think I was right the first time. Most authorities give ACF/ACS as just the ACVS/ACVF scaled by the variance. This is certainly in line with R acf() and with the normal definitions of covariance and correlation. So this doesn’t appear to be an issue after all.

That doesn’t legitimize the procedure, of course – just that it does appear to be implemented as intended.
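The scaling relationship is easy to check numerically. What follows is a small Python sketch, not the R code under discussion: the acvf and acf helpers are hand-rolled stand-ins that remove the sample mean and divide by n, which I am assuming matches the usual sample definitions, and the test series is random data with a deliberately nonzero mean.

```python
import numpy as np

def acvf(x, max_lag):
    """Sample autocovariance at lags 0..max_lag, sample mean removed."""
    x = np.asarray(x, dtype=float)
    d = x - x.mean()
    n = len(d)
    return np.array([np.dot(d[: n - k], d[k:]) / n for k in range(max_lag + 1)])

def acf(x, max_lag):
    """Sample autocorrelation: the autocovariance divided by its lag-0 value."""
    g = acvf(x, max_lag)
    return g / g[0]

rng = np.random.default_rng(1)
# Illustrative series with a nonzero mean on purpose.
series = 5.0 + 0.1 * np.cumsum(rng.standard_normal(200))

g = acvf(series, 10)
r = acf(series, 10)

print("lag-0 autocorrelation:", r[0])
print("ACVF equals ACF times variance:", np.allclose(g, r * g[0]))
print("ACF unchanged by shifting the mean:", np.allclose(acf(series + 100.0, 10), r))
```

Under these definitions the two sequences differ only by the constant factor g[0], and a nonzero mean in the input does not matter because it is subtracted first.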

Pete, you say:

I’d say that using hosking.sim is a mistake regardless of whether you use the acf or the acvf.

Do you simply mean that this approach to generating “null” proxies is conceptually flawed and produces highly misleading results? Of course, I would agree with that. Or are there any other issues?

Yep.

Well, McI was still a jerk for not answering the question.

That’s “some” PC1, all right. It was carefully selected from the top 100 upward bending PC1s, a mere 1% of all the PC1s.
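A toy illustration of why that matters, using made-up numbers rather than anything from the actual archive: the scores below are just 10,000 draws from a standard normal standing in for whatever “hockey stick” measure is used to rank the PC1s. Keeping the 100 largest gives a set that looks nothing like a random 100.

```python
import numpy as np

rng = np.random.default_rng(42)

# Stand-in scores: one hypothetical "hockey stick" score per simulated PC1.
# Drawn from a standard normal purely for illustration.
scores = rng.standard_normal(10_000)

# Keep the 100 largest scores, i.e. the top 1%.
top100 = np.sort(scores)[-100:]

# A genuinely random sample of 100, for comparison.
random100 = rng.choice(scores, size=100, replace=False)

print(f"all 10,000 : mean = {scores.mean():.2f}")
print(f"random 100 : mean = {random100.mean():.2f}")
print(f"top 100    : mean = {top100.mean():.2f}, min = {top100.min():.2f}")
```

Any figure drawn from the top100 set shows the extreme tail of the distribution, not a typical simulated PC1.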

That is what I have been wondering about. The size of the sample that Mann used to get his temperature records from, and the size of the sample M&M used to get their ‘hockey sticks’ from. If M&M have to trawl through many more to get their samples, aren’t they misrepresenting Mann’s process?


Uh, I’m not very conversant in statistics nor in climatology, but the last part of the hockey stick curve (contemporary times) is the best known, the best proven, because it’s based on actual thermometer readings, not proxies.

So, stating that randomly generated samples sometimes show upwards curves at the end is utterly irrelevant to the subject.

Maybe M&M want to argue that the MWP bump, as shown through proxies, is a statistical aberration with no real-world significance?

Somehow, I don’t think that is Wegman/M&M intent.

up high linear albegra theory?

Weggie?

Does anybody take this person seriously anymore?

Maybe that’s what Wegman used to get the higher resolution in Fig 4.4: Top Secret Naval plotting code.

Just imagine if it fell into the wrong hands…

I’ve had a little bit of trouble following this. So Wegman essentially just used MM03/MM05 code for his figures in his work and then it was cited as independent verification of MM’s work?

Another question. Besides the short-centered PCA, what other “serious” issues were there with Mann et al. 1998/1999 that turned out to be valid?

In going through the code were there any very serious issues with MM?

“what other”? “what other”?

You’re asking for a re-summary of a lot of debate.

MBH had several comments on it, a corrigendum, and a bunch of McI posts. MM for its sake had four comments (two published) and then additional (as in this blog’s comments) features found wrong with it. And of course, there remains a lot of disagreement on what is wrong and not wrong.

And then we get into the whole issue of right/wrong being confounded with large/small (impact).

You’re asking for a lot, dude. A lot of repetition and going back to square one. Are you trying to get Deepie to do your 750 word Curry essay for you?

I don’t think there were any besides the Corrigendum. Of course there were lots in the peanut gallery debate, but nothing further scientific as I remember.

And the short centering was, arguably, a “serious” issue only in the sense that it wasn’t clearly pointed out in the original paper. It had no real impact. So, you see I disagree with TCO.

Clear Science,

Answering you would be easier if we had a tool like this for climatic exchanges:

http://mathoverflow.net/

Since we only have blogs, everything gets rinsed and repeated way too much.

Regarding NSWC:

1) David Marchette and Jeffrey Solka were previous students.

2) John R. Rigsby III was a current student, in that he’d finished his MS in 2005, but was (I think) working on PhD (part-time, most likely).

I’ve visited NSWC in the 1990s. At least then, its missions did not include paleoclimate work or using bad social network analysis to attack peer review in paleoclimate. I am becoming increasingly interested in learning if any Federal funds or facilities were used for NSWC employees to help out with Wegman Report, or if the work was done on spare time.

One must wonder. As I noted in SSWR, there really wasn’t any useful new statistical analysis, but let’s look at the various figures to see what could have been generated by code needing release approval:

Figs 4.1-4.4: DC has shown these to have used McIntyre’s code.

Fig 4.5 is the distorted version of the infamous 1990 IPCC FAR.

(Maybe NSWC has a graph digitization and distortion program?)

Figs 4.6-4.7 show versions of Fig 4.5 overlaid with noise.

(Maybe that’s the NSWC code??)

Figs 5.2-5.7 (social network graphs) were produced, presumably by Rigsby, but (I think) using Pajek. (The blockmodels show up in the book, and Google images: pajek shows a lot of charts of the same sort as in WR.)