Over at ClimateAudit and WUWT they’ve broken out the champagne and are celebrating (once again) the demise, nay, the shattering into 1209 tiny splinters, of the Mann et al “hockey stick” graph, both the 1998 and 2008 editions. The occasion of all the rejoicing is a new paper by statisticians Blakeley McShane and Abraham Wyner, entitled A Statistical Analysis of Multiple Temperature Proxies: Are Reconstructions of Surface Temperatures Over the Last 1000 Years Reliable? [PDF]. The paper, in press at the Annals of Applied Statistics, purports to demonstrate that randomly generated proxies of various kinds can produce temperature “reconstructions” that perform on validation tests as well as, or even better than, the actual proxies.

My discussion of McShane and Wyner is divided into two parts. First, I’ll look at the opening background sections. Here we’ll see that the authors have framed the issue in surprisingly political terms, citing a number of popular references not normally found in serious peer-reviewed literature. Similarly, the review of the “scientific literature” relies inordinately on grey literature such as Steve McIntyre and Ross McKitrick’s two Environment and Energy articles and the (non peer-reviewed) Wegman report. Even worse, that review contains numerous substantive errors, some of which appear to have been introduced by a failure to consult cited sources directly, notably in a discussion of a key quote from Edward Wegman himself.

With regard to the technical analysis, I have assumed that McShane and Wyner’s applications of statistical tests and calculations are sound. However, here too, there are numerous problems. The authors’ analysis of the performance of various randomly generated “pseudo proxies” is based on several questionable methodological choices. Not only that, but a close examination of the results shows clear contradictions with the findings in the key reconstruction studies cited. Yet the authors have not even mentioned these contradictions, let alone explained them.

In a late breaking development, Blakeley McShane’s website advises that the paper has been accepted, but that the draft available at the Annals of Applied Statistics (AOAS) is not final. However, the available document does acknowledge the input of two anonymous reviewers as well as that of the AOAS editor Michael Stein (whose purview includes “physical science, computation, engineering, and the environment”). Thus, we can expect the final version to be reasonably close, but not identical, to the one available at the AOAS website. With those caveats in mind, let’s take a closer look.

The AOAS guidelines call for authors to introduce their topic in “as non-technical a manner as possible”. After a brief introduction of paleoclimatology concepts, McShane and Wyner have taken this directive to heart and framed the issue in terms not unlike those found on libertarian websites:

On the other hand, the effort of world governments to pass legislation to cut carbon to pre-industrial levels cannot proceed without the consent of the governed and historical reconstructions from paleoclimatological models have indeed proven persuasive and effective at winning the hearts and minds of the populace. … [G]raphs like those in Figures 1, 2, and 3 are featured prominently not only in official documents like the IPCC report but also in widely viewed television programs (BBC, September 14, 2008), in film (Gore, 2006), and in museum expositions (Rothstein, October 17,2008), alarming both the populace and policy makers.

After a passing reference to three Wall Street Journal accounts of “climategate”, McShane and Wyner start in on the history of the controversy “as it unfolded in the academic and scientific literature.” With a backing citation of all three McIntyre and McKitrick papers (an obvious warning sign), the authors state categorically:

M&M observed that the original Mann et al. (1998) study … used only one principal component of the proxy record.

This is nonsense – the famous PC1 was the leading principal component of one proxy sub-network (North American tree rings) for one period of time (the 1400 step that represents the start of the original MBH98 reconstruction). And even for that sub-network, two PCs were used, not one.

Then we learn that this single principal component was a result of a “skew”-centred principal component analysis that “guarantees the shape” of the reconstruction. McShane and Wyner continue:

M&M made a further contribution by applying the Mann et al. (1998) reconstruction methodology to principal components computed in the standard fashion. The resulting reconstruction showed a rise in temperature in the medieval period, thus eliminating the hockey stick shape.

In fact, the alternative reconstruction from M&M started in 1400 and showed a clearly spurious spike in that century (normally considered well after the medieval period).

Mann and his colleagues vigorously responded to M&M to justify the hockey stick (Mann et al., 2004). They argued that one should not limit oneself to a single principal component as in Mann et al. (1998), but, rather, one should select the number of retained principal components through crossvalidation on two blocks of heldout instrumental temperature records (i.e., the first fifty years of the instrumental period and the last fifty years). When this procedure is followed, four principal components are retained, and the hockey stick re-emerges even when the PCs are calculated in the standard fashion.

Mann et al (2004) is the Corrigendum to MBH98, which fixed some data listing errors (without affecting the actual data or findings). It made no reference to a changed PCA methodology, nor could it have: the Corrigendum was issued in March 2004, while the differing centering conventions were only identified much later that year! It did, however, further explain the original PCA methodology, whereby the number of PCs retained for each proxy sub-network at each “step” interval was based on objective criteria combining “modified Preisendorfer Rule N and scree test”. (In fact, Mann’s methodology involved rebuilding the network with fewer and fewer proxies as one goes back, requiring recomputation of PCA for each large sub-network at each interval.)

The account then skips ahead to the Barton-Whitfield investigation of Mann and his co-authors, followed by the Wegman report:

Their Congressional report (Wegman et al., 2006) confirmed M&M’s finding regarding skew-centered principal components (this finding was yet again confirmed by the National Research Council (NRC, 2006)).

Actually, the NRC report preceded Wegman by three months and was much more comprehensive, rendering the Wegman report superfluous.

But the biggest shocker is Wegman’s supposed excoriation of Mann et al 2004 for “adding principal components” after the “spurious results” of the previous de-centered method were revealed. In support of this assertion, the authors quote from Wegman’s supplementary congressional testimony:

In the MBH original, the hockey stick emerged in PC1 from the bristlecone/foxtail pines. If one centers the data properly the hockey stick does not emerge until PC4. Thus, a substantial change in strategy is required in the MBH reconstruction in order to achieve the hockey stick, a strategy which was specifically eschewed in MBH…a cardinal rule of statistical inference is that the method of analysis must be decided before looking at the data. The rules and strategy of analysis cannot be changed in order to obtain the desired result. Such a strategy carries no statistical integrity and cannot be used as a basis for drawing sound inferential conclusions.

If I’ve learned one thing in following McIntyre and his acolyte auditors, it’s to always check the ellipsis. Space does not permit showing the full passage from Wegman’s testimony (given in reply to a written supplementary question from Rep. Bart Stupak). But even a little of the omitted text is very revealing:

… in the MBH original, the hockey stick emerged in PC1 from the bristlecone/foxtail pines. If one centers the data properly the hockey stick does not emerge until PC4. Thus, a substantial change in strategy is required in the MBH reconstruction in order to achieve the hockey stick, a strategy which was specifically eschewed in MBH. In Wahl and Ammann’s own words, the centering does significantly affect the results.

[Question omitted]

Ans: Yes, we were aware of the Wahl and Ammann simulation… Wahl and Ammann reject this criticism of MM based on the fact that if one adds enough principal components back into the proxy, one obtains the hockey stick shape again. This is precisely the point of contention. It is a point we made in our testimony and that Wahl and Ammann make as well. A cardinal rule of statistical inference is that the method of analysis must be decided before looking at the data. The rules and strategy of analysis cannot be changed in order to obtain the desired result. Such a strategy carries no statistical integrity and cannot be used as a basis for drawing sound inferential conclusions.

So this passage has nothing whatsoever to do with the Mann corrigendum, but rather is a discussion of a subsequent paper by Wahl and Ammann. At the same time it reveals some pretty shocking sleight-of-hand by Wegman himself, even leaving aside the snarky dismissal of a paper as “unpublished” when in fact it was peer-reviewed and had been in press for almost four months at the time of Wegman’s response.

[Update, Sept. 17: As I note in a comment below, McShane and Wyner likely confused the Mann et al reply to McIntyre and McKitrick’s 2004 Nature comment (neither of which was ever published) with the earlier Mann et al 2004 Corrigendum. This is further evidence that McShane and Wyner did not consult the original sources, but relied on poorly understood material from ClimateAudit.]

Here Wegman is attempting to claim that Wahl and Ammann acknowledge that the differing number of principal components is itself a “change in strategy”. But this is a gross misrepresentation of Wahl and Ammann’s point, which was that an objective criterion is required to determine the number of PCs to be retained, and that this number will vary by sub-network and period, as well as by centering convention. M&M arbitrarily selected only two because that’s what Mann had done at that particular step and network. They failed to implement Mann’s criterion (as noted previously), or indeed any criterion, and thus produced a deeply flawed reconstruction.

Wahl and Ammann demonstrated that when an objective and reasonable criterion for PC retention is used, the result is a validated reconstruction very similar to the original. That Mann used such a criterion in the original MBH98 is obvious from an examination of the various PC networks engendered from the NOAMER tree-ring network, as the number of retained PCs varied greatly.

Wahl and Ammann also pointed out that a different number of PCs should be retained even if centred PCA were used; if the proxies were standardized, Wahl and Ammann’s simple objective criterion would still call for two PCs, rather than five.

None of this should be construed as an endorsement of Mann’s original “decentered” PCA, which was criticized by statisticians. But it does demonstrate that its effect on the final reconstruction was minimal.
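For readers unfamiliar with the centering issue, here is a minimal sketch of why the convention matters at the PCA stage. It assumes "decentered" means subtracting the mean of the calibration period only, rather than the mean of the full series. Both toy series, the 580-step length and the 80-step calibration window are invented for illustration and are not taken from any real proxy network.

```python
def mean(values):
    return sum(values) / len(values)

def sum_sq_about(series, centre):
    """Sum of squared deviations of a series about a chosen centre."""
    return sum((v - centre) ** 2 for v in series)

N_TOTAL = 580   # hypothetical series length
N_CALIB = 80    # hypothetical calibration window at the end of the series

# Toy series 1: flat, then rising through the calibration window.
stick = [0.0] * (N_TOTAL - N_CALIB) + [i / N_CALIB for i in range(1, N_CALIB + 1)]
# Toy series 2: a stationary wiggle with the same mean everywhere.
wiggle = [0.3 if i % 2 == 0 else -0.3 for i in range(N_TOTAL)]

def inflation(series):
    """Ratio of short-centred to fully centred sum of squares."""
    full = sum_sq_about(series, mean(series))
    short = sum_sq_about(series, mean(series[-N_CALIB:]))
    return short / full

print("stick  inflation:", round(inflation(stick), 2))
print("wiggle inflation:", round(inflation(wiggle), 2))
```

Centering on the calibration mean adds n times the squared gap between the two means to a series' sum of squares, so a series whose recent mean departs from its long-term mean carries more apparent variance into the decomposition. That affects how the PCs are ordered; it says nothing by itself about the final reconstruction once an objective retention rule is applied.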

I’ll return to the exclusion of Wahl and Ammann from consideration in the Wegman report another time. For now, I’ll merely note that the situation was the reverse of that claimed by Wegman, and by extension McShane and Wyner; clearly, it was M&M who had no “strategy” for retention of PCs.

I’ll turn now to undoubtedly the most controversial part of the paper, namely the analysis of “null” randomly generated pseudo-proxies used to assess the significance of reconstruction. From the abstract:

In this paper, we assess the reliability of such reconstructions and their statistical significance against various null models. We find that the proxies do not predict temperature significantly better than random series generated independently of temperature.

This section, like the subsequent reconstruction of section 4, is based on the same set of 1209 proxies as the landmark Mann et al 2008 PNAS study. That study used two methodologies that should be kept in mind:

- CPS (composite-plus-scale), based on regression of screened proxies against local grid-cell instrumental temperature.

- EIV (Errors-in-Variables), based on the same set of proxies (screened or not) and temperature series, but taking into account wider spatio-temporal correlations (so-called “tele-connections”). EIV is a variation on the RegEm methodology and can be likened to a PCA approach.

As McShane and Wyner explain, temperature reconstructions are typically evaluated by checking a “held back” window within the instrumental-proxy overlap period, which in the case of Mann et al was from 1850-1995. In Mann et al 2008, two mini-reconstructions are performed, an “early window” and a “late window”. For example, the “early window” validation attempts to “reconstruct” temperature from 1850-1895, based on a calibration of proxies to temperature from 1896-1995.

The fidelity of the “window” reconstruction, as measured by RE (reduction of error), is compared to the corresponding reconstruction obtained using a “null” set of proxies. In the case of Mann et al, the “null” proxy sets consisted of randomly-generated AR1 “red noise” series with an autocorrelation factor of 0.4.

Of course, there is only one real proxy set, but many “null” proxy sets, so as to create a distribution of RE statistics, permitting the setting of a 95% or 90% significance level. (The details are not so important, as long as one keeps in mind the basic idea of comparing a real-proxy reconstruction to an ensemble created from random “null” proxies.)
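The basic idea can be sketched in a few lines of Python. This is a toy illustration only: each "null reconstruction" here is a single AR1 series regressed on a made-up temperature series, which is far simpler than either CPS or EIV, and the temperature data, the number of null draws and the helper names are all assumptions introduced for the example.

```python
import random

def ar1_series(n, rho, rng):
    """Return n values of a zero-mean AR(1) process x[t] = rho * x[t-1] + e[t]."""
    # Start from the stationary distribution so there is no warm-up transient.
    prev = rng.gauss(0.0, 1.0) / (1.0 - rho ** 2) ** 0.5
    series = []
    for _ in range(n):
        prev = rho * prev + rng.gauss(0.0, 1.0)
        series.append(prev)
    return series

def fit_line(x, y):
    """Ordinary least squares fit y ~ a + b * x; returns (a, b)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    return my - b * mx, b

def re_score(obs, pred, calib_mean):
    """Reduction of error: 1 - MSE(prediction) / MSE(calibration-mean baseline)."""
    num = sum((o - p) ** 2 for o, p in zip(obs, pred))
    den = sum((o - calib_mean) ** 2 for o in obs)
    return 1.0 - num / den

def null_re_threshold(temp, calib_idx, valid_idx, n_null=500, rho=0.4,
                      level=0.95, seed=0):
    """Empirical RE threshold from an ensemble of AR(1) null 'reconstructions'."""
    rng = random.Random(seed)
    calib_temp = [temp[i] for i in calib_idx]
    valid_temp = [temp[i] for i in valid_idx]
    calib_mean = sum(calib_temp) / len(calib_temp)
    scores = []
    for _ in range(n_null):
        proxy = ar1_series(len(temp), rho, rng)
        a, b = fit_line([proxy[i] for i in calib_idx], calib_temp)
        pred = [a + b * proxy[i] for i in valid_idx]
        scores.append(re_score(valid_temp, pred, calib_mean))
    scores.sort()
    return scores[min(n_null - 1, int(level * n_null))]

# Hypothetical "instrumental" series for 1850-1995: slow trend plus noise.
_rng = random.Random(42)
temperature = [0.005 * t + _rng.gauss(0.0, 0.15) for t in range(146)]
valid_idx = list(range(0, 46))      # early window, 1850-1895
calib_idx = list(range(46, 146))    # calibration period, 1896-1995
threshold = null_re_threshold(temperature, calib_idx, valid_idx)
print(f"95% null RE threshold (toy example): {threshold:.3f}")
```

A real-proxy reconstruction would then be called significant at that level if its verification RE exceeded the threshold obtained from the null ensemble.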

McShane and Wyner set out to evaluate the performance of the Mann et al 2008 proxy set against a number of different “null” proxy types. In doing so, they employed a similar “validation window”, but with a number of methodological differences:

- The simpler Lasso L1 multivariate methodology was used, instead of the Mann et al screening/reconstruction methodologies.

- The 46-year validation window was shortened to 30 years.

- The instrumental calibration period was expanded to end in 1998.

- A sliding interpolated series of windows was used, instead of the two fixed “early” and “late” windows (which then become the two extreme points of a range of verification windows).

- Finally, the hemispheric average temperature series was used for calibration, rather than the gridded temperature.
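To make the window scheme concrete, here is a small sketch of how such a series of holdout blocks could be enumerated. The 1850 start, 1998 end and 30-year block length come from the description above; the function name and the choice to calibrate on every year outside the block are my own assumptions, not a transcription of McShane and Wyner's code.

```python
def holdout_blocks(first_year=1850, last_year=1998, block_len=30):
    """Enumerate contiguous holdout blocks and their complementary calibration years."""
    years = list(range(first_year, last_year + 1))
    blocks = []
    for start in range(len(years) - block_len + 1):
        valid = years[start:start + block_len]
        # Everything outside the holdout block is used for calibration.
        calib = years[:start] + years[start + block_len:]
        blocks.append((calib, valid))
    return blocks

blocks = holdout_blocks()
first_valid = blocks[0][1]
last_valid = blocks[-1][1]
print(len(blocks), "blocks")
print("first block:", first_valid[0], "to", first_valid[-1])
print("last block: ", last_valid[0], "to", last_valid[-1])
```

Only the first and last blocks require pure extrapolation beyond the calibration years. Every block in between has calibration data on both sides, which is why those are described as "interpolated" windows.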

Each of these is worth discussing, but first let’s look at the results. The following chart shows the spread of the performance of the real proxies and the “null” proxies over the range of validation windows. Figure 9 shows the RMSE (root mean square error) for each proxy type. (By the way, the NRC Report Box 9.1 is a good summary of the RMSE and RE measures discussed here).

According to this chart, none of the “null” proxies are significantly worse than the real proxies (lower RMSE representing a better fit in the verification window). And some even perform better over all (for example AR1 Empirical).

Let’s look a little more closely at the AR 1(.4) “null” proxy, which was also used in Mann et al 2008.

Earlier, the use of the first and last blocks had been characterized as open to “data snooping” abuse:

A final serious problem with validating on only the front and back blocks is that the extreme characteristics of these blocks are widely known; it can only be speculated as to what extent the collection, scaling, and processing of the proxy data as well as modeling choices have been affected by this knowledge.

Now, McShane and Wyner make much of the fact that the real proxy set (in red) only performs better than AR1 (in black) in some early and late blocks.

Hence, the proxies perform statistically significantly better on very few holdout blocks, particularly those near the beginning of the series and those near the end. This is a curious fact because the ”front” holdout block and the ”back” holdout block are the only two which climate scientists use to validate their models. Insofar as this front and back performance is anomalous, they may be overconfident in their results.

But not so fast. The proper comparison is really with the very first and very last blocks – the ones actually used in climate studies. And those two blocks tell a very different story.

In fact, the very first block (“early window”) and the very last block (“late window”) actually show almost no difference between the two, with Mann et al’s real proxy data set firmly in the middle of the distribution of the “null” AR1 proxy performance spread. The fact that nearby “interpolated” windows show better performance is irrelevant, as these are not actually used in real climate studies.

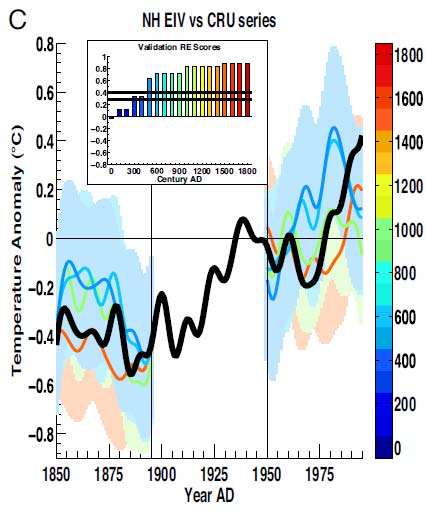

So that begs the question – what was the performance actually shown for these two verification windows by Mann et al? After all, exactly the same real proxies and “null” proxy type were used. Here is Fig. 1C:

Both the early and late validation windows look very good, at least when based on the full proxy set (plotted in red)!

Let’s take a close up view of the RE (reduction-of-error) statistics (in general, lower RMSE results in higher RE):

At almost 0.9, the RE score is well above the 95% significance level, which is only 0.4 for the “null” proxies. Recall the definition of RE (courtesy of the NRC):

$$RE = 1 - \frac{MSE(\hat{y})}{MSE(\bar{y}_c)}$$

where $MSE(\bar{y}_c)$ is the mean squared error of using the sample average temperature over the calibration period (a constant, $\bar{y}_c$) to predict temperatures during the period of interest …

For some reason, McShane and Wyner did not report the widely used RE statistic. But clearly, it is very unlikely that such similar RMSE scores could result in such widely divergent RE scores.
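The link between the two measures is easy to check numerically. Taking RE as one minus the ratio of the prediction's mean squared error to that of the calibration-mean baseline, RE is fixed once RMSE is known for a given verification window. The sketch below uses invented numbers purely to illustrate that relationship; none of the values come from either paper.

```python
import math

def rmse(obs, pred):
    """Root mean squared error of a prediction."""
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(obs, pred)) / len(obs))

def re_score(obs, pred, calib_mean):
    """Reduction of error relative to predicting the calibration-period mean."""
    mse_pred = sum((o - p) ** 2 for o, p in zip(obs, pred)) / len(obs)
    mse_ref = sum((o - calib_mean) ** 2 for o in obs) / len(obs)
    return 1.0 - mse_pred / mse_ref

# Hypothetical verification-window temperatures and calibration-period mean.
observed = [-0.40, -0.35, -0.30, -0.38, -0.25, -0.33]
calib_mean = 0.0

# Two hypothetical predictions: one close to the observations, one less so.
good_pred = [-0.38, -0.33, -0.33, -0.35, -0.28, -0.30]
poor_pred = [-0.10, -0.60, -0.05, -0.55, 0.00, -0.50]

mse_ref = sum((o - calib_mean) ** 2 for o in observed) / len(observed)
for name, pred in (("good", good_pred), ("poor", poor_pred)):
    r = rmse(observed, pred)
    # Same window and baseline, so RE follows directly from RMSE.
    print(name, "RMSE:", round(r, 3),
          "RE:", round(re_score(observed, pred, calib_mean), 3),
          "1 - RMSE^2/MSE_ref:", round(1.0 - r ** 2 / mse_ref, 3))
```

For a fixed window and baseline, a lower RMSE always means a higher RE, so two methods cannot agree closely on one measure and diverge wildly on the other unless something else (the window, the baseline, or the target series) differs.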

So one should look elsewhere for an explanation of this stunningly wide discrepancy. Perhaps the Lasso methodology results in inappropriate screening or overfitting. And the thirty year window might also help the cause of the “null” proxies. Indeed, it is curious that this window has been shortened, given the authors’ complaints about already short verification periods.
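To see why the Lasso acts as an implicit screening step, consider the textbook special case of an orthonormal design, where the Lasso estimate is simply the least squares coefficient shrunk toward zero by a fixed amount and set exactly to zero if it is smaller than that amount. The sketch below shows only this special case; the coefficient values and penalty are invented, and real proxy matrices are neither orthonormal nor this small.

```python
def soft_threshold(coef, penalty):
    """Shrink a coefficient toward zero by `penalty`, clipping at exactly zero."""
    if coef > penalty:
        return coef - penalty
    if coef < -penalty:
        return coef + penalty
    return 0.0

def lasso_orthonormal(ols_coefs, penalty):
    """Lasso solution when predictor columns are orthonormal (special case only)."""
    return [soft_threshold(c, penalty) for c in ols_coefs]

# Hypothetical least squares coefficients for five proxies.
ols_coefs = [0.90, -0.05, 0.30, 0.02, -0.60]
lasso_coefs = lasso_orthonormal(ols_coefs, penalty=0.25)
kept = [i for i, c in enumerate(lasso_coefs) if c != 0.0]
print("proxies retained:", kept)
```

Any predictor whose coefficient falls below the penalty is dropped entirely, so the penalty level chosen effectively determines how many proxies contribute at all.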

Whatever the reason, it should be crystal clear that McShane and Wyner’s simulation has failed to capture the actual behaviour of the study that inspired so much of their work. And the failure to actually cite the comparable results from Mann et al is puzzling indeed.

Now, I’ll turn to the “empirical AR1” proxy, claimed to outperform the real proxies. Ammann and Wahl 2007 noted that this proxy is problematic:

To generate ”random” noise series, MM05c apply the full autoregressive structure of the real world proxy series. In this way, they in fact train their stochastic engine with significant (if not dominant) low frequency climate signal rather than purely non-climatic noise and its persistence.

McShane and Wyner attempt to rebut this, in a way that some may find unconvincing:

The proxy record has to be evaluated in terms of its innate ability to reconstruct historical temperatures (i.e., as opposed to its ability to ”mimic” the local time dependence structure of the temperature series). Ammann and Wahl (2007) wrongly attribute reconstructive skill to the proxy record which is in fact attributable to the temperature record itself.

However, Ammann and Wahl also state that, despite these problems, the real proxies hold up quite well:

Furthermore, the MM05c proxy-based threshold analysis only evaluates the verification-period RE scores, ignoring the associated calibration-period performance. However, any successful real-world verification should always be based on the presumption that the associated calibration has been meaningful as well (in this context defined as RE >0), and that the verification skill is generally not greatly larger than the skill in calibration. When the verification threshold analysis is modified to include this real-world screening and generalized to include all proxies in each of the MBH reconstruction segments – even under the overly-conservative conditions discussed above – previous MBH/WA results can still be regarded as valid, contrary to MM05c. Ten of the eleven MBH98 reconstruction segments emulated in WA are significant above the 95% level (probability of Type I error below 5%) when using a conservative minimum calibration/verification RE ratio of 0.75, i.e. accepting poorer relative calibration performance than the lowest seen in the WA reconstructions (0.82 for the MBH 1400-network).

Again, this real-world result flies in the face of McShane and Wyner’s findings. And the omission of any reference to the contradictory results in Ammann and Wahl, let alone rebuttal thereof, is especially curious, as the above paragraph follows immediately after the passage discussed in detail by McShane and Wyner.

Frankly, after this exhaustive (and exhausting) examination of Section 3, I’m sure readers will understand (and even be grateful) if I do not enter a detailed discussion of Section 4, which contains an actual temperature reconstruction. I’ll simply note that the McShane and Wyner millennial reconstruction has a pronounced hockey stick shape, albeit with a higher Medieval Warm Period and wider error bars than the norm seen in various spaghetti graphs (apparently attributable to the Bayesian “path” approach). Here too, there are several questionable methodological choices, including a simplistic principal component approach that is almost certainly overfitting (for example, 10 principal components to represent 90-plus proxies seems excessive on its face). [Update, Sept. 17: It should also be noted that McShane and Wyner have not implemented an objective criterion for the retention/selection of principal components, the same mistake made by McIntyre and McKitrick back in 2003, as noted above.]

So there you have it. McShane and Wyner’s background exposition of the scientific history of the “hockey stick” relies excessively on “grey” literature and is replete with errors, some of which appear to have been introduced through a misreading of secondary sources, without direct consultation of the cited sources. And the authors’ claims concerning the performance of “null” proxies are clearly contradicted by findings in two key studies cited at length, Mann et al 2008 and Ammann and Wahl 2007. These contradictions are not even mentioned, let alone explained, by the authors.

In short, this is a deeply flawed study and if it were to be published as anything resembling the draft I have examined, that would certainly raise troubling questions about the peer review process at the Annals of Applied Statistics.

==========================

Reference list to come.

Nice work in such a short time!

Here is another post that I liked:

[DC: That appears to be relatively content-free.]

Grate work!

I might be in minority however I think it would be an interesting read if you did section 4 as well!

> always check the ellipsis

Wow. If the authors or editors are reading this, the typical rule is:

“Use a period followed by ellipsis points to indicate the omission of the last part of the quoted sentence or the first part of the next sentence. Use a period followed by ellipsis points only after a grammatically complete sentence. Do not change lowercase letters to capitals or vice versa in the middle of quoted material.”

http://www1.umn.edu/urelate/style/punctuation.html#Anchor-ELLIPSIS-17304

Why this nitpickiness? Because otherwise authors can get away with doing what these folks did, running unrelated sentences together to make a new sentence that doesn’t exist in the cited source.

Tsk.

Here is a non-paywalled link to Wahl and Ammann.

Shouldn’t your ref to Mann et al 2008 Figure 1c be to Figure 2c? It’s the green curve you need to look at for comparison.

Yes, their RMSE values vs. Mann’s RE looks weird…

Can you explain why the proper comparison is with the very first and very last periods of the temperature record? Shouldn’t the proxies follow temperature equally over the whole period?

Have I got it right that the basic point you are making is that your faith in the hockey stick is unaffected by the finding that taking 30 year sections of the Mann 08 proxies and matching them to the temperature record gives a worse correlation than random noise matched with the temperature record?

[DC: Their results are in direct conflict with the real Mann et al reconstruction validation, which uses only the first and last windows. McShane and Wyner show terrible performance in those same windows, so none of their results are reliable. ]

Note that if we treat the calibration mean and validation series as constants, then RE is simply a linear transformation of MSE, and therefore a monotonic transformation of RMSE.

So if RE is significant then so is RMSE, and vice versa.

The wider error bars are due to taking annual errors instead of much smaller 40-year errors. This is a particularly embarrassing mistake for statisticians to have made.

pete, I am not convinced that what you are saying is right. Yes they report the uncertainty bounds annually, but they are computed from a bundle of simulation curves computed by running a degree-two autoregressive filter in time, driven by the proxy PCs. This produces a smoothing effect depending on the filter coefficients beta-11 and beta-12.

From looking at the graph I would guess that the smoothing period is a few years — certainly not more than ten. This agrees with what Mann found for the autocorrelation.

But yes, this is the reason why spaghetti graph error bars are smaller, there I agree with you.

One can also notice that the millennial back-cast only uses the 93 proxies which go all the way back. Hence, the uncertainty of the later parts of the reconstruction could be reduced by using more proxies.

Nitpick – last word is meant to be ‘Statistics’?

[DC: Thanks for reading to the end – fixed now.]

Thanks for this. Very interesting.

Just to alert you – your final sentence ends “Annals of Applied Science” that should be “Annals of Applied Statistics”.

So you spend half of this post to refute their interpretation historical events not related to the actual study?

You stated “But not so fast. The proper comparison is really with the very first and very last blocks – the ones actually used in climate studies. And those two blocks tell a very different story.” Isn’t that what they said? In as simple terms as I can……This is a time/temp series. The fact that 2 separate time block preformed well and all other didn’t doesn’t validate anything. In fact it calls into question the entire proxy series. This is much akin to looking at a clock and noting that it is correct twice a day, yet incorrect much of the other parts of the day but because it is correct twice a day the clock is correct. It doesn’t work that way.

Frankly, after reading your response to M&W, I agree, there are questions that probably need answered before stating the paper is valid. But you show little proof behind your conclusion statement. Probably because you spent half your time disputing irrelevant sequences of events. The history of the hockey stick isn’t relevant to the study itself, but rather relevant to the impetus. Which in the end, who really cares why they choose to write a paper on the reliability of proxy data? <—— Remember that? That was the purpose of the study, not historical sequences of a graph debate. Which, btw, I'll have to check but I don't believe you have it proper either.

In another part of your conclusion, you state, “So there you have it. McShane and Wyner’s background exposition of the scientific history of the “hockey stick” relies excessively on “grey” literature…”

Reading the paper, “We are not the first to observe this effect. It was shown, in McIntyre and McKitrick (2005a,c),” and “This approach is similar to that of McIntyre and McKitrick (2005a,c) who use the full empirical autocorrelation function to generate trend-less pseudo-proxies.” Those are the only 2 references in the actual study(unlike you, I don’t include the introduction as part of the study) to McIntyre and McKitrick and it is quite obvious they didn’t use them in the study but only cite observations that are similar. Wegman is cited once in the conclusions to verify their statement, “While the literature is large, there has been very little collaboration with university level, professional statisticians.” I suppose you could disagree with that statement, perhaps you and I can get a grant and study whether that statement is true of false and then submit our conclusion to a climate journal and get peer-reviewed so we could know if in fact climatologists consult with statisticians or not.(Because apparently, only selected climate journals are arbiters of truth.) That being said, I’ve gotta ask, what grey literature are M&W relying upon? Reading your critique, you state it is a flawed paper, but mostly you cited their statements in the introduction not relevant to the study itself. Further, you state they rely on gray literature. Where, and in what manner do they rely upon gray literature? You state the proxies are valid because they perform well in the parts you deem important. Sir, your critique is flawed. Try again. Thanks.

I’ll answer a couple of your points.

1. “The fact that 2 separate time block preformed well and all other didn’t doesn’t validate anything.”

The point is those *same* blocks did not perform well in M&W. In fact, the proxies performed worse there than most of the interpolated blocks. At most, this suggests that first/last block is a “harder” validation test to pass. As well, it was misleading of M&W to imply that good early and late block performance was somehow related to the choice of first and last block, conveniently omitting that the effect was for interpolated blocks only, *not* the first and last blocks actually used by paleoclimatologists.

But in any event it’s clear their validation methodology does not apply to real paleoclimatology studies (Lasso overfitting/suboptimal screening, shorter validation window etc.)

2. Collaboration

Of course there are many statisticians working with climate scientists. Off the top of my head – Doug Nychka, Peter Bloomfield, Richard Smith … This is the best way for statisticians to contribute – when statisticians try to do it on their own, the result is inevitably flawed.

3. Further, you state they rely on gray literature

I clearly stated that it was the background section that did so. Why should that be permissible?

Thanks for the reply DC. If M&W results on the first and last windows are very different to those of Mann et al, well just maybe that is because they are right and Mann is wrong.

pete- perhaps you could take the time to explain to those of us who do not know, why it is wrong to take annual readings instead of 40 year groupings for the error bars? Does a 40 year smoothing give the most accurate result?

It’s not so much that one is better than the other. The problem is they’re different, and comparing one to the other is apples and oranges.

Also, the reason M&W get different results to Mann08 is that the Lasso is a really really bad reconstruction method. EIV scores about 0.1 on holdout-RMSE, which would be highly significant in Figure 10 for any of the noise types.

Of course we would have known that if they had followed what has become standard practice in the field: test your methods against synthetic proxy data generated by adding noise to GCM output. They even cite several papers which do just that, but didn’t bother to try. I wonder why?

The reason why you validate using the start and end blocks is simple: you want to test extrapolation. Interpolation is easy, especially for those null proxies that bring their own smoothness to the table, like those autoregression variants. Extrapolation on the other hand is hard, yet that’s what real paleoreconstructions are doing. It’s a test, remember?

pete:

I have no idea (why you think so?), but

1) Lasso is nonlinear. This means, e.g., that the result will depend on the pre-scaling of the input data. I could imagine ways in which that becomes a problem…

2) Does the Bayesian reconstruction actually use the Lasso for its update likelihood? I don’t see it in their code — but what does that WinBUGS code do (haven’t used anything but Linux for over a decade)?

Yep, that’s the kind of performance you would expect. How do they manage to push it up to 0.25…0.3?

1) The main reason I disapprove of the lasso is that subset selection wastes data. More data should improve a reconstruction; with the lasso a new proxy would have to be better than 58/1138 existing proxies to have any influence.

2) Their Bayesian reconstruction uses principal components regression.

I don’t think that the different time scales are the only reason for different error bar sizes, just that it’s a bad idea to compare the sizes of error bars at different time scales.

Is this journal, “Annals of Applied Statistics”, to be taken seriously when it accepts such editorializing and political posturing? It seems that it may be vying with E & E as the most discredited so called “science journal”.

Ian, I think this is an honest case of analysis that, on the face of matters, looks just fine. E&E publishes any nonsense that is sufficiently critical of AGW (in whatever way, from outright denial to nitpicking details that have no impact, but are blown up anyway). Don’t disparage a journal for one potential failure.

> why it is wrong to take annual readings instead of 40 year groupings for the error bars?

This means that MW2010 and M2008 are comparing apples and oranges, kind of similar to the difference between “confidence intervals” (interval for the mean) and “prediction intervals” (interval for obs). Climatologists smooth because they implicitly hypothesize that there is an underlying physical process which the smoothed proxies track better than the raw data do (much as an ENSO-adjusted temp. series is probably more physically reasonable for detecting anthropogenic forcing effects), not to mention that some proxies don’t come at annual resolution. MW2010 frowns on this method and instead tries to “capture” the full uncertainty in the proxy sets (see this post by a statistician). There is subjectivity in choosing either, where the tradeoff occurs between adding physical skill and overfitting. I don’t think there’s anything wrong with either, in principle.

To go a little further, the smoothing rationale seems to be under-documented in the climatology literature (unless I’m missing a few earlier papers).

But I guess one can presuppose that the smoothing of proxies is a more realistic proxy for the global climate since the proxies are derived from local areas. Annual resolution for the proxies can contain local climatic variability that’s just a distraction. As the Rabett points out, it doesn’t seem like MW2010 calibrates against local temps. In essence, by trying hard to avoid overfitting from the smoothing, one can underfit the model. I call this whoring to uncertainty 🙂

pete – thanks for the reply. I just thought that since the proxies are all (as far as I know) annual readings such as tree rings or lake sediments they each can be determined to a precise year in the past, and the global temperatures are compared on an annual basis, perhaps you lose accuracy (or is it precision?) when you take 40 year averages in order to produce the error bars.

Presumably if we did a 40 year smoothing on the temperature record of the last 100 years it would show a lot less variation than when we show the data in an annual form – but would it mean missing out a lot of the useful information and giving a misleading impression?

Perhaps M&W did not make an elementary mistake in comparing apples with oranges, but instead deliberately wanted to show the annual error bars since they thought they were more precise (or is it accurate?) than those from 40 year averages.

In that case they would have said as much in their paper. They didn’t.

The proxies are mostly annual, but some have only decadal resolution.

I agree with DC’s comments on the strangeness of situating the paper in the historical / political context – for a purely science/stats paper, that seems questionable and not truly relevant. There have been many criticisms of the Wegman report and M&M. It seems out of place but if you’re going to do this kind of preamble, at least appear to do an objective job of it. This one reeks to me of partisanship.

As such, one has to question the objectivity of the authors because their read of history is so slanted. Will they report data that supports their perspective and use methods that select the outcome they expect? I’m not claiming they did or did not but to start off in such a manner and tone does not inspire confidence in this reader…

Yes, but as I noted over at Deltoid, one gets the idea that the Wegman report was a strong source for M&W, even to the odd use of the word “artifact” in both. Wegman Report, The Sequel … but with more actual statistical analysis, albeit problem-laden.

I would be tempted to thumbnail your critique as roughly “the failure of McShane and Wyner to confirm the reliability of prior studies in this field is primarily due to their inexplicable decision to choose a naive methodology which is incapable of arriving at such an assessment.” There’s more to it than that of course, but I think the methodology seems to be the main vulnerability. It won’t matter if the authors messed up in their introduction if their methodology and conclusions hold up.

I’m particularly surprised by their determination that the proxies are not up to the task of reconstruction. If true that’s quite an extraordinary conclusion, and in any case it’s one that will no doubt be revisited with great enthusiasm by other researchers in this field.

I’ll take a look at the paper itself when the final text is published, and I’m looking forward to seeing how the authors address the apparent methodological issues.

Oooohhhh, Eduardo Zorita chimes in:

http://klimazwiebel.blogspot.com/2010/08/mcshane-and-wyner-on-climate.html#more

His conclusion is actually the same point I’ve been making a few times earlier: why did they not involve any climate scientists?

On the whole it’s a quite devastating critique, should make a few statisticians red in the face…

Zorita weighs in …

(H/T “Marco” over at Deltoid)

And due to the moderation queue H/T “Marco” over at Deepclimate….right above your post. (grin)

Zorita weighs in:

http://klimazwiebel.blogspot.com/2010/08/mcshane-and-wyner-on-climate.html

He’s not too enthusiastic either.

I’m still trying to figure out the paper itself, but I find it ironic that they rail against the lack of collaboration between climatologists and statisticians and then proceed to do their own analysis without collaborating with any climatologists.

As per Deltoid #243 and Deltoid #288, this is a repeat of the Wegman meme.

It wasn’t true then, it isn’t true now, and serious statisticians know better, and actually follow ASA Ethical Guidelines, which say:

“A. Professionalism

1. Strive for relevance in statistical analyses. Typically, each study should be based on a competent understanding of the subject-matter issues…”

I do hope that a lot of the paper can be cleaned up. I know this will enrage some of us who like a little blood mixed into the drama, but there are just plain mistakes of history and method within the background section. Also places where the language is non-neutral. And really….none of it matters….so it would be much better if toned down, left out, and fixed.

Then the authors can get to their 30 year block holdout testing. We can debate that. That’s so much more worthy a technical idea to engage on (even if wrong, at least it’s a worthy concept to really crunch on!) Not the over-reliance on an ad hoc secondary report (Wegman).

I’ve been playing with the M&W code for Figure 17. Their ‘backcast error’ is a sort of ‘lazy man’s credible interval’ — they just graph 100 realisations of the backcast from the posterior distribution. I’ve certainly done that myself (it’s very easy to do in R), but for publication you probably want to make some attempt to properly calculate 95% credible intervals.

Their backcast envelope gives the impression of errors of +/–0.7dC. Calculating proper credible intervals would reduce that to +/–0.5dC. Smoothing the backcasts first, to make the errors comparable to the ones they are criticising, results in errors of +/–0.3dC.

This might be reduced further with a better reconstruction method. It’s still going to be larger than Mann08’s +/–0.1dC, but it’s nowhere near as dramatic as the misleading Figure 17.
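
The difference between the envelope of plotted realisations, a pointwise 95% credible interval, and the same interval computed after smoothing can be sketched in a few lines. This is a minimal Python illustration on synthetic draws, not M&W’s R code: the array `draws`, its noise scale, the function names and the 5-point moving average are all assumptions made up for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for posterior backcast draws:
# 100 realisations x 850 years of independent noise around a flat series.
n_draws, n_years = 100, 850
draws = rng.normal(loc=0.0, scale=0.25, size=(n_draws, n_years))

def envelope_halfwidth(x):
    """Half the min-to-max spread of the realisations, averaged over years."""
    return float(np.mean(x.max(axis=0) - x.min(axis=0)) / 2)

def credible_halfwidth(x, level=0.95):
    """Half the pointwise central credible interval, averaged over years."""
    tail = 100 * (1 - level) / 2
    lo, hi = np.percentile(x, [tail, 100 - tail], axis=0)
    return float(np.mean(hi - lo) / 2)

def smooth(x, window=5):
    """Moving average applied to each realisation separately."""
    kernel = np.ones(window) / window
    return np.apply_along_axis(
        lambda row: np.convolve(row, kernel, mode="valid"), 1, x
    )

env = envelope_halfwidth(draws)
ci = credible_halfwidth(draws)
ci_smooth = credible_halfwidth(smooth(draws))
print(f"envelope: +/-{env:.2f}  95% CI: +/-{ci:.2f}  smoothed 95% CI: +/-{ci_smooth:.2f}")
```

The ordering (envelope wider than the credible interval, which is wider than the smoothed interval) is the point; the sizes are not. Independent noise shrinks far more under smoothing than autocorrelated backcast draws would.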

Silly question:

Is it possible that the ever-rising backcast is an artifact of a cumulative error? They seem to use an equation of the sort “y(t) = B1*Proxies(t) + B2*y(t-1) + B3*y(t-3)”. If, say, the proxy values are roughly flat, and if the Betas sum to even slightly more than one, don’t we expect some spurious upward trend? I suppose there is something built in to guard against that.
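
The mechanism being asked about is easy to demonstrate on a toy recursion. The sketch below is not M&W’s model: it assumes a generic two-lag form y(t) = b1*proxy + b2*y(t-1) + b3*y(t-2) with a constant proxy input, and the coefficient values and the function name `iterate` are invented for illustration.

```python
def iterate(b1, b2, b3, proxy=0.1, steps=300):
    """Iterate y(t) = b1*proxy + b2*y(t-1) + b3*y(t-2) from a zero start."""
    y = [0.0, 0.0]
    for _ in range(steps):
        y.append(b1 * proxy + b2 * y[-1] + b3 * y[-2])
    return y

# Lag coefficients summing to 0.9: settles at b1*proxy / (1 - b2 - b3).
stable = iterate(1.0, 0.5, 0.4)

# Lag coefficients summing to 1.05: errors accumulate and the series runs away.
drifting = iterate(1.0, 0.6, 0.45)

print(stable[-1], drifting[-1])
```

With a flat input, lag coefficients that sum to less than one give a bounded series, while a sum even slightly above one produces a steadily growing one. Whether any of the posterior draws in the actual model fall in that regime is a separate question.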

pete, isn’t that the same code as for Figure 16?

BTW as you seem to have the code running, what about trying different numbers of PCs in the range 5-10? Would be great to see!

(I’ve also done bayesian — in Matlab. Yes, you need thousands of samples at least to get the 95% edges right.)

Sure… but to their defence*) they are doing the decadal and 30-years comparisons with averages over those time spans, i.e., correctly. Don’t you think?

*) They are using LaTeX, they cannot be all evil 😉

toto:

I would have used priors for b11 and b12 that exclude that possibility, but it looks like M&W’s Bayesian model leaves that possibility open. This would only affect individual realisations of the backcast though. Large enough (b11,b12) could cause runaway errors in either direction, which should cancel out when calculating the posterior mean. But it doesn’t look like any of the realisations have runaway errors.

Martin Vermeer has a good explanation for the warm backcast in a comment at Deltoid.

Gavin’s Pussycat:

I’ve only run the R code (the code and data archive contains intermediate results). Might fire up OpenBUGS later (I’m on Linux too), but don’t hold your breath 😉

It looks like they’re getting their posterior probabilities right (probability 1998/90s/last30 were warmest year/decade/30yrs) given their model. But the probabilities would be higher if the backcast wasn’t so warm.

Finally got some comparable error bars for Figure 17 by comparing 5yr smoothed M&W to the 5yr smoothed data from Mike’s website. Mann08 errors are +/–0.3dC, corrected M&W are +/–0.4dC. Caveat: M&W’s wider errors might be from using a poor reconstruction technique.

Yeah, I’d prefer more samples but I think in this case the autocorrelation can help us get the edges right if we wanted to plot credible intervals (I’d smooth the edges with the same filter I used on the data) .

I’m averaging credible interval widths over 850 years, so the 100 samples should be enough there.

Hmmm…. I would change that: M&W’s error isn’t in regressing against the global mean — Mann reports in the PNAS paper doing that too and getting the same result as by averaging individually regressed 5×5 degree grid cells — which is a logical consequence of linearity. So it isn’t that.

I would rather conjecture that the flaw in the M&W recon is in the regularization and choice of no. of PCs to take along (like DC also pointed out). Some of the excess “noisy” PCs are interacting with an arctic vs tropical PC in instrumental, and this gets projected on the axis tilt effect in pre-instrumental.

The Mann group (Rutherford, and also Wahl and Ammann) have spent a lot of effort sorting out optimal regularization for the TTLS technique they use with RegEM. I just don’t believe that M&W can have that kind of beginner’s luck without even trying.

BTW if you try let me know if OpenBUGS works for you with this code 🙂

The Bayesian stuff is just to get the uncertainty bounds. If you only want to know how the yellow line would change with different PCs then the Figure 14 code should be enough.

re: PolyisTCOandbanned

You are likely familiar with the idea of “money-laundering”, whether in the formal sense of washing illegal funds, or in the more general sense of moving money around so you can’t tell where it came from, as in some political donations and the whole funding complex of thinktanks and fronts, e.g., the way the Kochs helped the Tea Party get going.

A related one is “meme-laundering”, in which memes are taken from grey sources and put forth in ones that might get peer-reviewed, where they can be quoted. The WR was very likely meme-laundering M&M material from various grey sources, under the guise of doing statistical analysis of MBH, about the one thing they didn’t do, i.e., the equivalent of Wahl and Ammann (2007).

M&W appears to be doing some of the same for the WR…

I haven’t done an analysis yet of the various known Memes in M&W, but a bunch are there, as well as Themes (ignoring well-known scholarship, science, math).

Anyway, you seem to be thinking of this as a statistics paper that happens to have a bunch of extraneous junk in it, and it might be. It certainly has more of its own stat analysis than the WR. Given Wyner’s history of posts, getting the extra memes in may be an important goal…

@Gavin’s Pussycat

Regarding extrapolation vs. interpolation. An interpolation sure seems like two extrapolations, one forward and the other backward, coupled together. Is there really no significance at all to the fact that proxy interpolations underperform the nulls in M&W?

Think of a rope tied to two stakes, one at each end. Another rope is tied at one end to one stake. Which rope is more constrained by the stakes?

The only significance of M&W’s results is for lasso+proxy versus lasso+noise. It says nothing about the information available in the proxies for proper methods.

Regarding extrapolation vs. interpolation. An interpolation sure seems like two extrapolations

I’m not sure it does, because in the interpolation case, each of your “two extrapolations” actually “sees” where the curve will pick up later on. E.g. if you interpolate 30 points, your “half-extrapolation” from the left (over 15 points) is not really a pure extrapolation, because the process “knows” the value of the 31st point (and beyond), and thus can make a reasonable guess at what the curve should look like over the first 15 points.

This is not the case in pure extrapolation, where the process can’t see where the curve is going later on (since there is no “later on”).
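
A toy comparison makes the asymmetry concrete. The sketch below is only an illustration under invented assumptions: the “temperature” is a plain random walk, the interpolator is a straight line between the two points bracketing a 30-point gap, and the extrapolator simply carries the last known value forward. None of this is M&W’s procedure.

```python
import numpy as np

rng = np.random.default_rng(1)

def rmse(estimate, truth):
    return float(np.sqrt(np.mean((estimate - truth) ** 2)))

def trial(n_gap=30):
    """Return (interpolation RMSE, extrapolation RMSE) for one random walk."""
    walk = np.cumsum(rng.normal(size=n_gap + 2))
    left, right = walk[0], walk[-1]      # known points on either side of the gap
    truth = walk[1:-1]                   # the withheld block
    frac = np.arange(1, n_gap + 1) / (n_gap + 1)
    interpolated = left + frac * (right - left)  # sees both ends of the gap
    extrapolated = np.full(n_gap, left)          # sees only the left end
    return rmse(interpolated, truth), rmse(extrapolated, truth)

results = np.array([trial() for _ in range(2000)])
mean_interp_rmse, mean_extrap_rmse = results.mean(axis=0)
print(f"interpolation: {mean_interp_rmse:.2f}  extrapolation: {mean_extrap_rmse:.2f}")
```

Knowing where the curve picks up again pins the estimate at both ends of the gap, so the interpolation error comes out smaller on average even though the estimator knows nothing about the process.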

Is there really no significance at all to the fact that proxy interpolations underperform the nulls in M&W?

Well, there is. It shows that, when using the Lasso method, proxies are bad at resolving high-frequency information in the data (i.e. the detail of the wiggles around the trend). But I’m not sure that’s news.

That, and Pete’s point above – the Lasso’ed proxies have more variance, which handicaps their RMSE.

> dhogaza | August 19, 2010 at 1:37 pm

> Zorita weighs in …

>

> Rattus Norvegicus | August 19, 2010 at 1:59 pm

> Zorita weighs in:

Uh, oh–identical wording, differing only in punctuation ….

(grin)

Good question. No, an interpolation is not equivalent to two extrapolations. And no, I don’t believe the underperformance of proxies relative to nulls in this validation geometry has any significance. I would say it is misleading.

To see this, look at the simplest, dummiest “null” of all, the calibration-period mean. Assume, for the sake of argument, that instrumental temps have risen linearly from 0 to 0.75 over 150 years. Now, if you have a verification block at the beginning or the end of the period, length 30 years, the average temp over the verification period will be either 0.075 or 0.675, and the calibration period mean correspondingly 0.45 or 0.30.

Subtraction gives us the corresponding RMSe value: 0.375.

Now consider the M&W geometry. The RMSe value is 0.375 at the end positions of the blocks and vanishes for the central position (it won’t do that in reality, due to temps being “wiggly”). On average its absolute value will be only half of 0.375.

If you translate this to the RE metric, it means that a value of 0.90 for the end-block method translates to a value of 0.60 for the interpolated-block method. 0.75 translates to zero. And this only due to a change in validation geometry!
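
The arithmetic above can be checked with a short script. It is a sketch of the idealised example only: a perfectly linear 150-year series, a 30-year block, the block-mean offset used as the “RMSe” exactly as in the text (ignoring the trend inside the block), and RE taken as 1 minus the ratio of reconstruction MSE to null MSE. The function names are made up for the illustration.

```python
import numpy as np

# Idealised instrumental record: linear rise from 0 to 0.75 over 150 years,
# sampled at mid-year so that block means match the figures in the text.
years = np.arange(150)
temps = 0.75 * (years + 0.5) / 150

def null_offset(start, block=30):
    """|verification-block mean - calibration mean| for a block at `start`."""
    held = np.zeros(len(temps), dtype=bool)
    held[start:start + block] = True
    return float(abs(temps[held].mean() - temps[~held].mean()))

first_block = null_offset(0)      # verification block at the start
last_block = null_offset(120)     # verification block at the end
centre_block = null_offset(60)    # verification block in the middle

# Slide the block across every position, as in the interpolated-block geometry.
sliding_mean = float(np.mean([null_offset(s) for s in range(0, 121)]))

def translate_re(re_end, null_rmse_ratio):
    """RE after scaling the null RMSE, holding the reconstruction error fixed."""
    return 1 - (1 - re_end) / null_rmse_ratio ** 2

print(first_block, last_block, centre_block, sliding_mean)
print(translate_re(0.90, 0.5), translate_re(0.75, 0.5))
```

The end blocks give 0.375, the central block gives zero, and the average over all positions is close to half of 0.375. Halving the null RMSE then takes an RE of 0.90 to about 0.60 and an RE of 0.75 to about zero.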

And note (Figure 10 on p. 21) that the calibration-mean null performs identically in practice to the White Noise Proxy null. They are indistinguishable. The authors do remark on this:

Indeed.

The AR1(0.25) and AR1(0.4) pseudo-proxies also look rather similar, but, perhaps surprisingly, do even slightly worse.

I think I’ll stop there.

M&W cite (seemingly approvingly) M&M’s work but it seems to me that M&W’s reconstruction produces quite different results from M&M’s. Is this a valid comparison and am I looking at this correctly? If so then if M&W are correct wouldn’t M&M’s statistical skills be called into question just as much as Mann’s?

Since Mann et alia have yet to release their early statistical methodologies in a coherent format, even with the Mann et al (2004) Corrigendum to MBH98, it’s tough to have much faith in their follow up statistical work(s).

R.S. Brown:

Mann et al 2008 doesn’t use the short-centered PCA technique that has caused so much consternation among those of a certain denialist mindset.

Why should he bother to endlessly revisit a paper that’s 12 years old now?

That’s not how scientists work. They move forward. Even hockey teams replace their sticks after less than 12 years …

Deep Climate | August 20, 2010 at 1:46 am |

“I’ll answer a couple of your points.”

Thanks for getting back to me.

In your response you made 3 points. The first may be a valid concern. More examination may be required on this point, and as far as I can see it is the crux of the contention. I look forward to the back and forth that should come after publication.

Your second point referred to collaboration of statisticians. You said, “when statisticians try to do it on their own, the result is inevitably flawed.” That is a puzzling statement. Temps and proxies are represented by numbers. These numbers aren’t magical or mysterious. It doesn’t take a climatologist to apply those numbers to a formula or plot them on a graph. A quantitative or qualitative time series is just that. Climate scientists hold no unknown treasure of knowledge that others can’t see.

Your third point is even more perplexing to me. Why shouldn’t gray material be allowed in their background section? How does any of the mentioned gray material affect the study or the study’s conclusion? I see no reference to the gray material as being used in trying to determine if proxies are reliable. As far as I’m concerned, the authors, for background, could have stated they did this because they were bored. Or that they read Mother Goose and got inspiration from children’s literature. What possible difference could it make? Either their calculations are correct or not. Either their conclusions from the calculations are correct or not. Arguing that a mere mention of an inquiry invalidates the paper is an incredible stretch. I don’t like the book “Mein Kampf”, but just because someone mentions it doesn’t invalidate all they have to say in totality.

Thanks again.

James

There is a difference between describing or assessing the grey literature and *relying* on it. On every issue M&W side with M&M and Wegman.

The statistical analysis is flawed because it does not implement robust methodologies.

suyts wrote:

I am a former philosophy major and currently a computer programmer, and as such not an expert in this area, but if I may…

Temperatures and proxies are represented by numbers but they themselves are not numbers. They are aspects of a physical system. And a large part of the problem with a statistician attempting to do statistical analysis in climatology without the benefit of a climatologist will stem from his lack of knowledge of physics, chemistry and possibly even biology.

For example, Gavin’s Pussycat has already pointed out that in their analysis they did not take into proper account how polar amplification results in larger swings in temperature at higher latitudes — and that when estimating temperature variation at lower latitudes on the basis of proxies at higher latitudes one has to scale down the variation, that is recognize that the swings in temperature will be smaller at those lower latitudes. Likewise, a statistician will not automatically be aware of the difference between proxies of low resolution (which may be good at estimating average temperature on a decadal or even centennial scale) and proxies of high resolution that are good at estimating temperature at a yearly level.

For example, stomata count (where stomata are the holes in leaves through which leaves engage in respiration) apparently has a resolution no better than the lifetime of the plant. Or take borehole temperatures, with which one estimates surface temperatures, but where heat diffusion into the lower layers of the earth involves a progressive, largely linear, loss of resolution with depth, which will also be dependent upon geological factors.

Lacking a background in climatology or plant physiology a statistician is unlikely to be aware of this. Judging from the evidence, the two authors were unaware of polar amplification and what this implies in terms of how one derives information from proxies at different latitudes. Likewise they appear to have been unaware of how different proxies will have different resolution — and therefore attempted to judge models based upon an annual resolution when the proxy-based model was not designed for this.

Furthermore, a statistician who is unfamiliar with climatology may be inclined to treat its problems as exercises in curve-fitting instead of as problems that are ultimately physics problems. Some suggestion of this exists in McShane and Wyner’s testing of proxy-based and noise-based approaches by means of problems in which the endpoints are known and the approach is used to fill in the middle.

A proxy-based approach does not actually work on the basis of such interpolation, as the very point of using proxies is to be able to extrapolate beyond what is known, beyond the instrumental period. But a noise-based approach using the Lasso method is often quite similar to, or even reduces to, simple linear interpolation. Given largely linear trends over an especially brief amount of time, a noise-based, largely interpolating method will “do well” in a way that is quite irrelevant, where the “best method” would simply be to connect the dots by means of a straight line.

Nothing mystical or magical about it. Simply a matter of background knowledge, familiarity and expertise.

*

suyts wrote:

There are a number of problems with their reliance on grey literature.

First, if rather than attempting to familiarize themselves with the field by means of peer-reviewed literature they rely upon grey literature, then there isn’t much assurance of quality to that information. Their sources may be prejudiced, slanted or simply uninformed, and there won’t be even the most basic checks in place to suggest otherwise. And having relied upon such grey literature for their background regarding the technical issues that are involved, it would seem that they haven’t done any of the homework prior to taking the test.

Second, such grey literature may appear to justify a given methodology when the proper methodology actually has to be justified by other means. The proper methodology is not a matter of pure mathematics but is largely grounded in empirical science, e.g., the temporal resolution of borehole temperature measurements at different depths as a proxy of surface temperature at earlier times.

Third, misinformation is misinformation. If they are including misinformation in their essay, then they are responsible for spreading that misinformation. And this is most certainly something that should be critiqued — irrespective of what it might have to say regarding their math.

Fourth, it suggests a certain lack of professional and academic standards that have been put in place in order to preserve a level of objectivity and quality — which suggests that they may be granting something else precedence. It further suggests that in their view it is Ok for others to grant such things precedence.

Fifth, it pollutes peer-reviewed literature by allowing material which has not been quality checked to enter peer-reviewed literature by means of a back door. Which means that at later points their peer-reviewed article may be referenced by other peer-reviewed literature for precisely those points that did not in fact pass peer review.

*

Anyway, as suggested by the very beginning of my response, I personally do not have any real expertise in this area. As a matter of fact, much of what I mentioned was based upon what I was able to glean from the essay and the preceding comments. Nevertheless I hope it helped.

Unlike many statistical analyses, like stock market studies, physical sciences problems often have unforgiving data and ironclad conservation laws.

Example: suppose you give a small set of particle speed data from accelerators to a statistician, say {180, 181, 182, 183, 184, 185, 186} (in 1000s of miles/sec). They tell you the mean speed is 183, with a std deviation of 2.16, and draw a nice 2SD interval, say [178.7, 187.3]. Looks good, but there’s this minor problem that 186.282 is the speed of light. It is *very* easy to generate statistical analyses that generate uncertainty limits that are simply physically impossible, or at least, very, very unlikely.
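
The numbers in this example are easy to reproduce. The snippet below is just a check of the arithmetic using Python’s standard library; the variable names are invented, and the sample (n-1) standard deviation is assumed because that is what yields 2.16.

```python
import statistics

# Speeds in thousands of miles per second, as given in the example.
speeds = [180, 181, 182, 183, 184, 185, 186]
SPEED_OF_LIGHT = 186.282  # same units

mean = statistics.mean(speeds)
sd = statistics.stdev(speeds)          # sample standard deviation (n - 1)
lower, upper = mean - 2 * sd, mean + 2 * sd

print(f"mean = {mean}, sd = {sd:.2f}, 2SD interval = [{lower:.1f}, {upper:.1f}]")
print("every data point below c:", max(speeds) < SPEED_OF_LIGHT)
print("upper limit exceeds c:", upper > SPEED_OF_LIGHT)
```

Every individual value sits below the speed of light, yet the upper 2SD limit does not: the interval is a product of the statistical model, which knows nothing about the physical bound.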

Average temperatures don’t change without some reason, whether we understand it or not. Some reasons (like huge volcanoes) have very fast effects, then disappear. IPCC AR4, Chapters 6 and 9 are worth reading …

Given bounds on volcanic/aerosols, solar, and GHG concentrations, some completely unknown cause is needed for the MWP to have been as warm as now…

That is, at least by eyeball, the higher edges of M&W’s MWP uncertainty ranges either require throwing out a lot of data or maybe bending conservation of energy. If M&W want to argue learnedly that those parts of the AR4 are simply wrong, and can get it through credible peer review, then more power to them.

John Mashey

Your example with particle speed data is precisely what M&W are all about.

Four of the seven speeds given to the statistician are themselves greater than the speed of light. For your example to be relevant to the discussion on the M&W paper, the person providing the speeds has to be a particle physicist or the like. The statistician, quite rightly therefore, assumes that the data is meaningful as it comes from a credible source. Of course the statistical analysis will generate uncertainty limits that are simply physically impossible, or at least, very, very unlikely. It is, after all, based on data that contains values themselves that are simply physically impossible, or at least, very, very unlikely.

No. Read it again. They are all less than the speed of light.

I am not statistically inclined so please indulge my unsophisticated questions. In simple terms, please answer / explain the following:

1. Does the algorithm used in MBH 98 generate ‘Hockey Stick’ shaped graphs from red noise (as alleged by McIntyre and McKitrick)?

2. Is it true that “[a] random series that are independent of global temperature are as effective or more effective than the proxies at predicting global annual temperatures in the instrumental period” (as alleged by McShane & Wyner)?

3. Finally, why do some climatologists and statisticians so vehemently defend Mann’s work? If the answer to either of these questions is yes, Mann’s work is doomed.

Louis,

1) Yes but. The eigenvalues produced by the red noise test are an order of magnitude lower than the eigenvalues produced by Mann’s (admittedly incorrect) PCA methodology. As Wahl and Ammann (Climatic Change, 2007) showed, when the original 1998 reconstruction is done with correctly centered PCA methodology you still get a hockey stick, albeit with a very slightly warmer 15th century. Subsequent work by other researchers using different methodologies has confirmed the basic correctness of the original result. The problem is that the hockey stick exists in the data, thus McIntyre’s relentless attempts to discredit any proxy which shows a sharp 20th century rise.

2) No. See the discussion here (esp. comments by pete). Apparently the paper, when published, will appear with comments, which is highly unusual and perhaps indicative of the poor quality of the work. One of my pet peeves with this paper is that M&W did not benchmark their method against synthetic proxies to see how it performed when the answer is known. The lack of variability at any time scale and the apparent exaggeration of the long term Milankovitch cycle driven downward trend make me think that their method has huge problems.

3) There has been lots of discussion of MBH98/99 in the literature, not all of it complimentary. Most of this discussion is at this point, excuse the pun, academic. Mann has changed his methodology for doing multiproxy reconstruction in the intervening years, so you might even say that Mann is not defending MBH98/99: it is an old paper which contains an inconsequential error in the data processing and has been superseded by more recent work with better methodology.

“1) Yes but. The eigenvalues produced by the red noise test are an order of magnitude lower than the eigenvalues produced by…”

Very good: so you are happy with the method on the grounds that the eigenvalues are smaller for red noise. Nevertheless, I work in physics, and if one of my students tried to use such a model, I’d laugh and say, “Nice try. You can prove just about anything with those tricks.” You may think that I’m being narrow-minded, but it’s people like me who brought science to the point where it has such a high reputation, not people using PCA. By trying to lay claim to the credibility of hard science, while using the methods of soft science, climatologists are deceiving themselves and the public.

Except that “just about anything” would not include the results Mann got using PCA – let alone the subsequent work that avoided that method and got fairly similar results.

If, as you appear to be doing, you are trying to lay claim to the rigour of “hard science” whilst fallaciously arguing that a minor concern about the results of one method extends to all methods used, then you deceive yourself and the public.

Timothy Chase, just to say that was the clearest explanation I’ve yet seen of the potential (and apparent) problems of this paper.

So just exactly how many angels can dance on the head of a pin?

At one point Professional Theologians discussed such things at length.

Proxy data, however carefully analysed, can never offer anything other than a crude approximation of real climate conditions at any given period.

Thermometer readings from the early days of meteorological observation can never offer anything other than a crude approximation of real climate conditions at that period.

You are all guessing.

Read some history maybe?

Take out the word crude and lose the first paragraph. Then read “The Great Warming” by Brian Fagan. Report back with your findings.

You mean like history regarding, oh, the early years of powered flight, which involved a lot of educated guesswork compared to today’s highly-modeled, well-understood aircraft designs?

Those old airplanes did fly, you know.

You know James, I find that argument perplexing. Don’t you agree that the point of a scientific paper is to make a scientific argument, and that also the background material should serve that purpose and help the reader understand the argument — rather than independently amuse the reader?

Doesn’t it puzzle you at all that this stuff, which according to you is irrelevant to the study or its conclusion, is there? Don’t you wonder at all why? Have you seen any other scientific papers pulling this stunt? Where is your scepticism?

Gavin’s pussycat:

This is one of the mechanisms of “meme-laundering”, somewhat akin to the general form of money-laundering.

1) Start with various memes that simply don’t make it in the peer-reviewed journals that would really know better.

2) Slip some of those memes into a paper mostly about other topics and get it through peer review or into something more credible.

3) Any that survive are now quotable as peer-reviewed or credible.

.

Much of the Wegman report is plausibly of this nature, as will be seen fairly soon.

4) See the note at Deltoid (http://scienceblogs.com/deltoid/2010/08/a_new_hockey_stick_mcshane_and.php#comment-2740737) for the way in which:

a) McK (almost certainly) fed some references to some economists.

b) who incorporated them in a paper on economics practices.

c) and then McI references that…

However, I broke link there. See for the right ones.

I wonder, have you put that level of effort into seeing if the hockey stick graph is accurate (I mean, other than its deliberate disregard of previous warming periods)?

[DC: “Deliberate disregard”? That sounds like an accusation of fraud. Please read the comment policy and avoid off topic remarks and false accusations. ]

> accusation of fraud

His specialty, at his blog.

Tskandalous.

PS, check the source from which he took his illustration posted 8/20; note the text in red font there, in case his accusation gets copypasted elsewhere: http://www.coastwatch.msu.edu/

http://www.agu.org/pubs/crossref/2010/2010GL043877.shtml

GEOPHYSICAL RESEARCH LETTERS, VOL. 37, L16704, 6 PP., 2010

doi:10.1029/2010GL043877

Ranking climate models by performance using actual values and anomalies: Implications for climate change impact assessments

So they are arguing for getting rid of the sh***y models. Good call.

[DC: Surely you mean “less robust”. ]

[DC: Deleted as per policy.]

Judging from other comments, you don’t have any problem with people accusing others of malfeasance (see the post immediately following mine, which you didn’t see fit to comment on), and I cannot see how on earth talking about the hockey stick graph is off topic on a post… about the hockey stick graph.

[DC: Hank Roberts presented actual evidence for his assertion. The reference to off-topic was a general reminder.]

John, of course you’re right.

It is good to be aware that the real audience for papers like this, like for the Wegman report, is not the scientific community. Its real audience is the ‘watties’, who, not understanding science and distrustful of scientists and learning, happily absorb anything to confirm their prejudices. The paper is clearly so designed, with just enough credible looking science to get the payload past the (non-climatology) reviewers.

You can expose the flaws in the science in the paper until you see green in the face (and I expect, based on my reading of the paper, that there will be lots of green faces), but in the end it matters but little, being aimed at the wrong audience. What DC has been doing with Wegman and Said is as valuable as it is for precisely this reason: plagiarism is obvious even to those without any science background. All it takes is English reading comprehension. And when committed by someone in an academic position, it should have consequences.

I am confused by the following two statements:

From the paper :

“A final serious problem with validating on only the front and back blocks is that the extreme characteristics of these blocks are widely known; it can only be speculated as to what extent the collection, scaling, and processing of the proxy data as well as modeling choices have been affected by this knowledge.”

From your blog:

“But not so fast. The proper comparison is really with the very first and very last blocks – the ones actually used in climate studies. And those two blocks tell a very different story.”

So, is there a problem with validating only on the first and last blocks? Or is there no problem? Please clarify this in the blog.

M and W assert that real proxies only outperform “null” proxies in early and late blocks, implying that was one reason the first and last windows were chosen. But their own analysis shows these two are “harder” than the interpolated windows.

The obvious inference is that real proxy reconstructions perform much better than M and W would lead you to believe. I find M and W highly misleading on this point.

The proxies beat some versions of noise, but empirical AR and Brownian noise beat the proxies in almost all blocks (including early and late blocks).

Gavin’s pussycat:

“You can expose the flaws in the science in the paper until you see green in the face…”

I’m not sure if “You” was directed to me or as the generic “anybody.”

DC and others take fine care of the stat parts, are better at it than I, and it doesn’t need me. I work on other stuff, as DC has alluded to…

John, “You” = “One”

DC,

Are you aware that you can add a Twitter button to your posts for instant Tweets? It can be added by going to the Dashboard > Appearance > Extras > Show a Twitter “Tweet Button” on my posts checkbox

[DC: I am now – coming soon.]

Figure 14 is as dodgy as 17.

Observation 1:

Does anyone else find it a bit suspicious that the Bayesian model in Section 5 is based on the green reconstruction in Figure 14?

Observation 2:

5 of the reconstructions are based on a single proxy PC; they all look similar to the red curve. The 6 lasso/stepwise reconstructions all look very weird.

The remaining 16 out of 27 reconstructions lie more-or-less between the blue and green curves. Remove the 11 questionable reconstructions and you’re left with a lot less model uncertainty; I expect that might be reduced further if they used the more appropriate inverse (proxies on temperature) calibration.

Sorry, those numbers don’t quite add up. There’s also an ‘intercept only’ model (just a horizontal line extending the calibration period mean back in time). That makes 15 out of 27 reconstructions that are actually interesting, and these range from the blue curve to the green curve.

Oh yes. If you’re interested in constraining the temperature contrast between recent and millennium, what you do is choose, or construct, an estimator that does so best. Not this one.

Also of possible interest: the three reconstructions calibrating 5, 10, and 20 proxy PCs on the NH mean temperature (as opposed to PCs of the instrumental record in the other 12) are all at the warmer end (i.e. they all look like the green curve).

pete, how do you run those scripts? I’m new to R.

Gavin,

just turn on R and do:

source("DIRECTORYTOFILES/R_fig14.txt")

and check if the objects are loaded to the session by:

ls()

You may or may not have the packages installed, so say you are missing the MASS package, then install and load the package by doing:

install.packages("MASS"); library(MASS)

You’ll have to edit the scripts a little.

There’s a line in each one with

setwd("xxx")

which is short for “set working directory”. You need to make sure it’s setting it to the correct directory on your computer, as opposed to McShane’s.

apeescape, pete: thanks! That helps. Amazing…

BTW I tried to install ‘lars’ from the Denmark server and failed. Then from Berlin and succeeded. Are some CRAN servers incomplete?

BTW2 I’m not Gavin, just his furry pet 😉
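The steps apeescape and pete describe above can be collected into one short R session. What follows is a minimal sketch, not the authors' own workflow: `ensure_package` and `run_script` are hypothetical helper names, the demo script is a throwaway stand-in for a downloaded file such as R_fig14.txt, and the mirror URL is just one CRAN mirror that can be named explicitly when another one fails to serve a package.

```r
# Hypothetical helper: install a package only if it is missing, from a named mirror.
ensure_package <- function(pkg, repos = "https://cloud.r-project.org") {
  if (!requireNamespace(pkg, quietly = TRUE)) {
    install.packages(pkg, repos = repos)
  }
  library(pkg, character.only = TRUE)
}

# Hypothetical helper: run a script from its own directory, then restore the old one.
run_script <- function(path) {
  path <- normalizePath(path, mustWork = TRUE)
  old_dir <- setwd(dirname(path))
  on.exit(setwd(old_dir))
  env <- new.env()
  source(basename(path), local = env)   # objects land in `env`, not the global workspace
  ls(env)                               # names of the objects the script created
}

# Stand-in for a downloaded script (assumption: the real files are plain R code)
demo_path <- file.path(tempdir(), "demo_script.txt")
writeLines(c("x <- 1:10", "y <- cumsum(x)"), demo_path)

ensure_package("stats")   # always present; swap in "MASS" or "lars" as needed
created <- run_script(demo_path)
print(created)
```

Any `setwd("xxx")` line inside a downloaded script still has to be edited or commented out by hand, since the helper only controls the directory the script starts in.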

Reproduced!

I also tried (5,5), (10,10) and (20,20). Of these, (5,5) lies very close to the Mann et al. 2008 solution. Also (20,20) lies at that general level but well under it after 1400AD. (10,10) again lies clearly above it similar to the proxy-pc = 10 (i.e., (inf,10)) solution.

Wondering how these regularization practices correspond to what Mann is doing, if at all.

Then I tried (5,5), (10,5), (20,5) in the same figure. Now that’s interesting! The differences among them are crisp linear pivots around 1850AD. This is the effect of the number of instrumental grid PCs. So it seems that filtering or not of the instrumental data in this way interacts with “something” that produces a linear long-term trend over the millennium. The Earth axis tilt change?

Kevan Hashemi stated on August 24, 2010 at 9:30 am :

Kevan is arguing primarily on the basis of his being an authority in physics. As such I decided to see whether he is in fact such an authority.

Kevan Hashemi is an electrical engineer — who may nevertheless teach physics at Brandeis University’s Martin A. Fisher School of Physics.

Please see:

Kevan Hashemi

Electrical Engineer

Martin A. Fisher School of Physics

http://www.brandeis.edu/departments/physics/people/staff.html

If so, I feel sorry for his students. He has argued that the greenhouse effect is untestable since we can’t perform repeated experiments (presumably under controlled conditions in a laboratory), and thus he tried to draw a comparison between it and creationism.

I quote:

In the very next comment Jim Lippard points out in part, “You have an impoverished view of science that excludes all historical sciences,” and likewise points out that climate science is testable.

*

Ironically enough, Kevan’s argument is similar to that made by creationists regarding evolutionary biology in which creationists argue for a hard and fast dichotomy between historical and observational sciences. Given their assumption they draw the conclusion that evolutionary biology amounts to little more than story telling and cannot actually claim to be scientific.

But as I point out here:

Repeatability and the “Dichotomy” Between Historical and Observational Sciences

http://axismundi.hostzi.com/0/008.php

… empirical sciences all involve a mix of historical and observational components.

*

We can actually perform controlled, repeatable experiments in the laboratory with viral and bacterial evolution. Under laboratory conditions we have observed the evolution of symbiosis between amoeba-x and a bacterium that nearly wiped a population out but then entered into a symbiotic union in which neither the bacterium nor the amoeba is able to survive without the other. We have witnessed the repeatable evolution of strains of E. coli that developed the ability to metabolize citric acid. And we are able to observe and repeat the evolution of different strains of Spiegelman’s Monster under conditions in which nutrients were freely available and they slimmed down to become more efficient at replication. The smallest of these was roughly 50 ribonucleotides long, roughly the same length as what we have observed activated ribonucleotides to form in the presence of the mineral montmorillonite.

In contrast, we cannot perform controlled, repeatable experiments in stellar evolution, and when we observe the most distant galaxies we are looking at them not as they are now but as they existed roughly 13 billion years ago. Furthermore, even under so-called laboratory conditions we cannot eliminate all influences from outside the lab, and thus to a certain extent the conditions under which any experiment is performed are unique. Furthermore, when someone — such as myself — lapses into stating “we have observed” chances are this doesn’t include everyone and much more likely includes relatively few — who are nevertheless trusted (either as individuals or as institutions) by us due to our own largely unique personal history and experience.

Climate models differ in terms of their resolution, their approximations, and the physical processes that they model and thus in many of their projections. Nevertheless they are all built upon well-established principles of physics, and are testable for goodness of fit against a variety of trends and spatial patterns. And they have been used to make a variety of predictions, including for example that with an enhanced greenhouse effect the upper stratosphere will cool while the troposphere warms, that nights will warm more rapidly than days, and more generally that the Hadley Cells and dry subtropics will expand, the continental interiors dry out, storm tracks move northward, the tropopause rise, and ocean circulation change.

*